In the early days of enterprise AI, adoption was tightly controlled by centralized centers of excellence (CoEs) or dedicated data science teams, with little direct involvement from business units. These groups managed everything from data pipelines to model deployment, effectively acting as gatekeepers, while business users remained end consumers of analytics and insights. Skill development followed the same pattern: training focused almost exclusively on data scientists, AI/ML engineers, and IT staff, which widened the AI skills gap across the organization. Attempts at democratization were limited to low-code robotic process automation (RPA) for basic tasks, and true citizen AI development, such as building or applying models, was virtually nonexistent. Even with the introduction of AutoML tools, access remained confined to specialist teams, reinforcing a technical bottleneck. This setup restricted AI’s business potential, slowed innovation, and left large parts of the organization unable to use intelligent technologies effectively.

More recently, as AI has spread into consumer technology through platforms such as ChatGPT, Gemini, and Perplexity, many employees have noticed a stark gap between the availability and sophistication of the AI tools they use in their personal lives and those available at work.

The Evolution of Enterprise Tech Democratization: From Rule-based Tools to Autonomous Agents

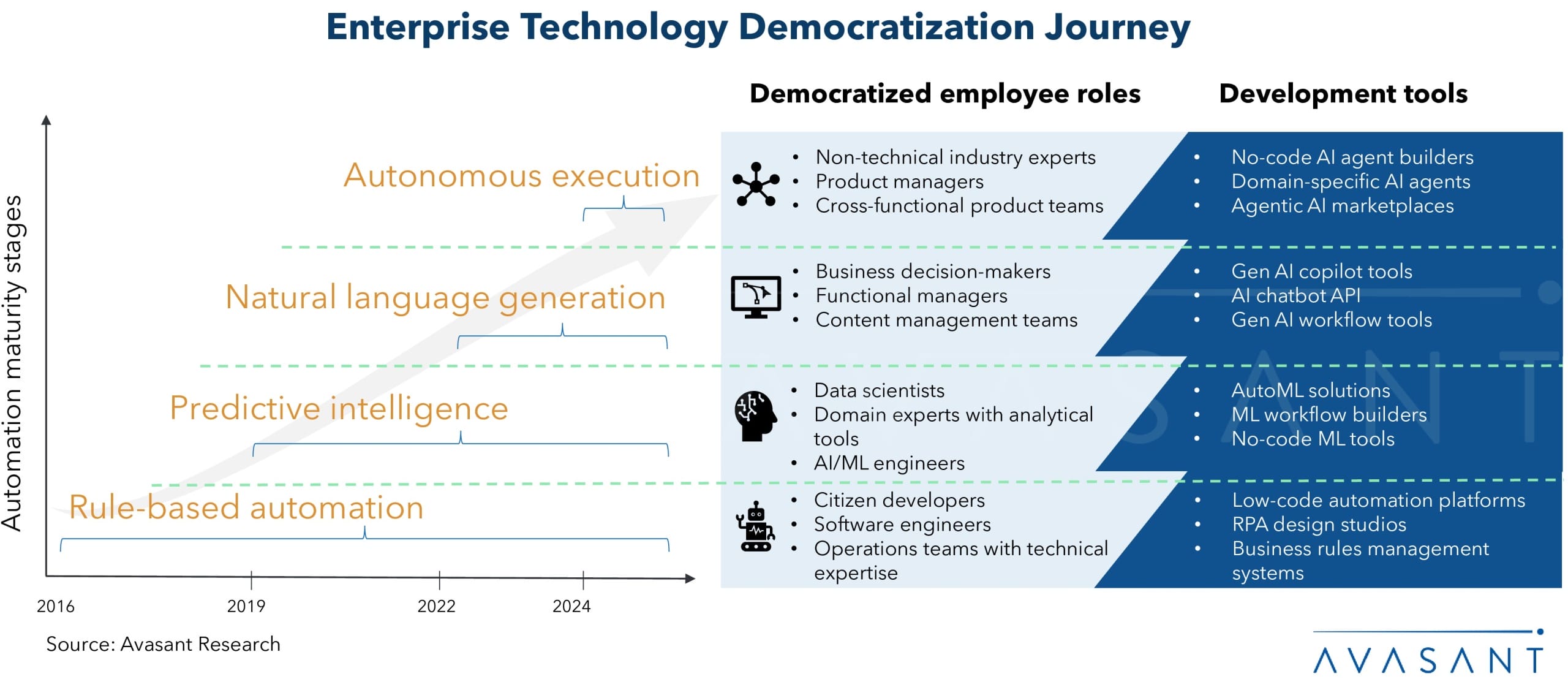

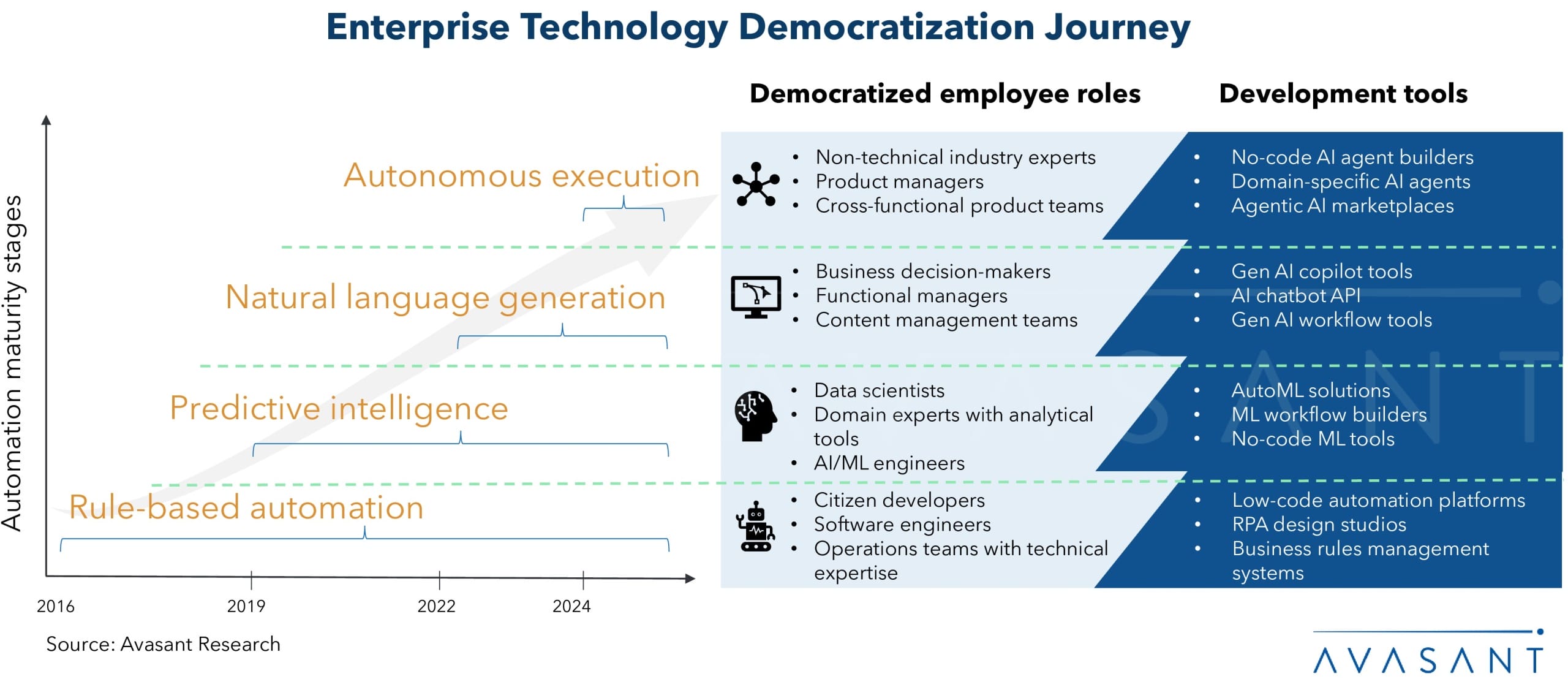

Technology democratization in enterprises has evolved far beyond giving nontechnical users access to basic automation tools. What began with exposing configurable rule-based systems to business users has now progressed to empowering them to design entire workflows through autonomous agents, without writing a single line of code.

In the early days, RPA allowed frontline employees to adjust predefined logic for repetitive tasks. Then came the rise of traditional AI and AutoML platforms, where data scientists abstracted the complexity of modeling into templates that analysts and risk officers could experiment with, although deployment still required centralized oversight.

The emergence of generative AI (Gen AI) was a game-changer. With natural language as the interface, functional teams such as marketing, HR, and operations could directly query models, summarize content, and generate insights, expanding access across departments. But it did not stop there.

Now, with agentic AI, we are witnessing the next leap: users no longer merely interact with a model; they can configure toolchains, define task logic, and delegate multistep goals to autonomous digital agents. This marks a fundamental shift from task-based execution to goal-oriented orchestration, heightening the need for embedded governance, real-time observability, and built-in fail-safes.

According to Avasant’s Generative AI Strategy, Spending, and Adoption Metrics report, enterprise AI budgets increasingly prioritize developing AI-ready talent. The emphasis has shifted toward empowering employees not just to overcome their fear of using this disruptive technology but to actively leverage it for exponential productivity gains.

Intesa Sanpaolo, a leading European bank, exemplifies this approach. It has consistently democratized access to emerging technologies as those technologies have evolved. The following case study outlines this journey across four key phases, highlighting how AI capabilities have steadily moved closer to business users.

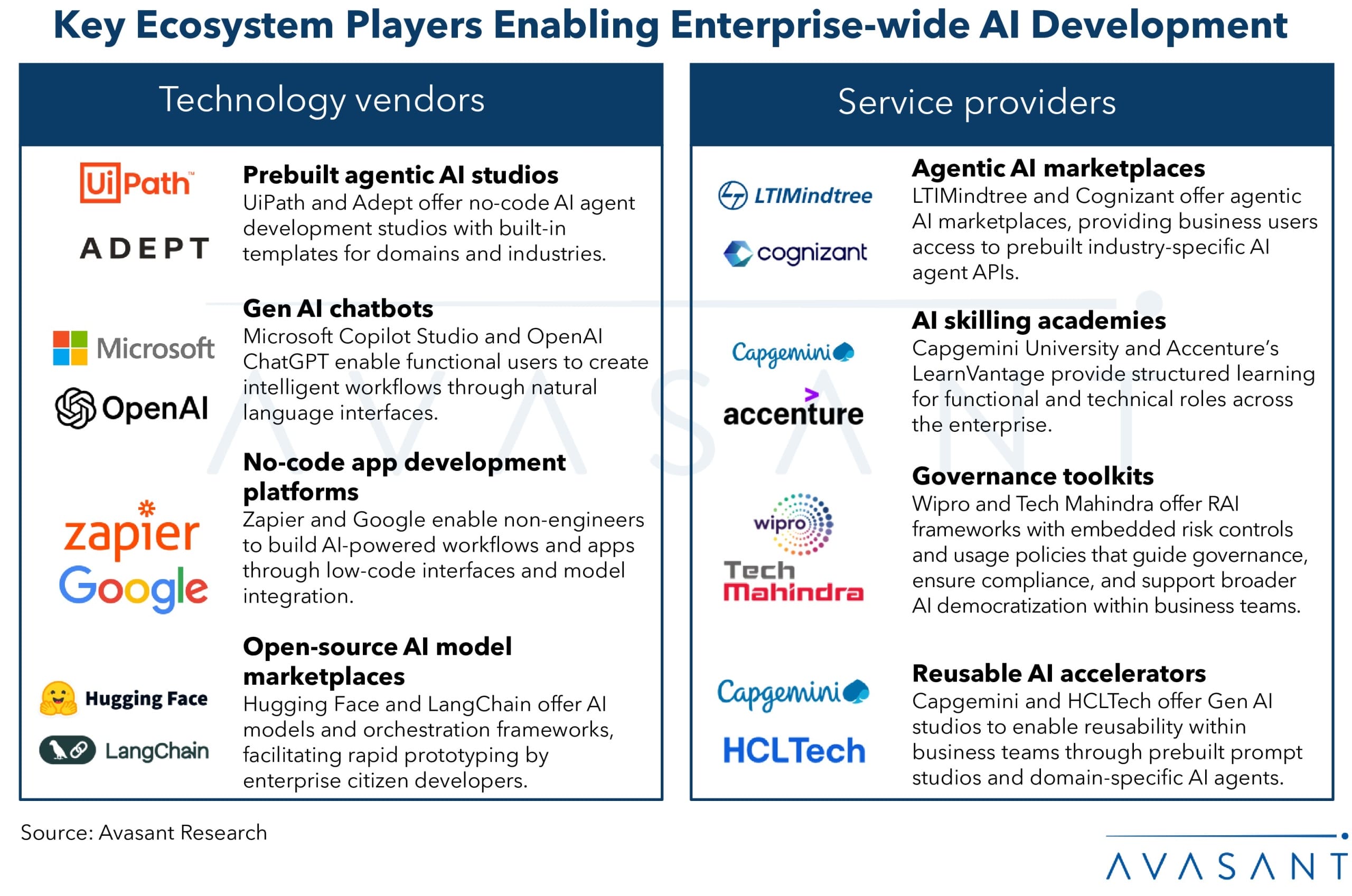

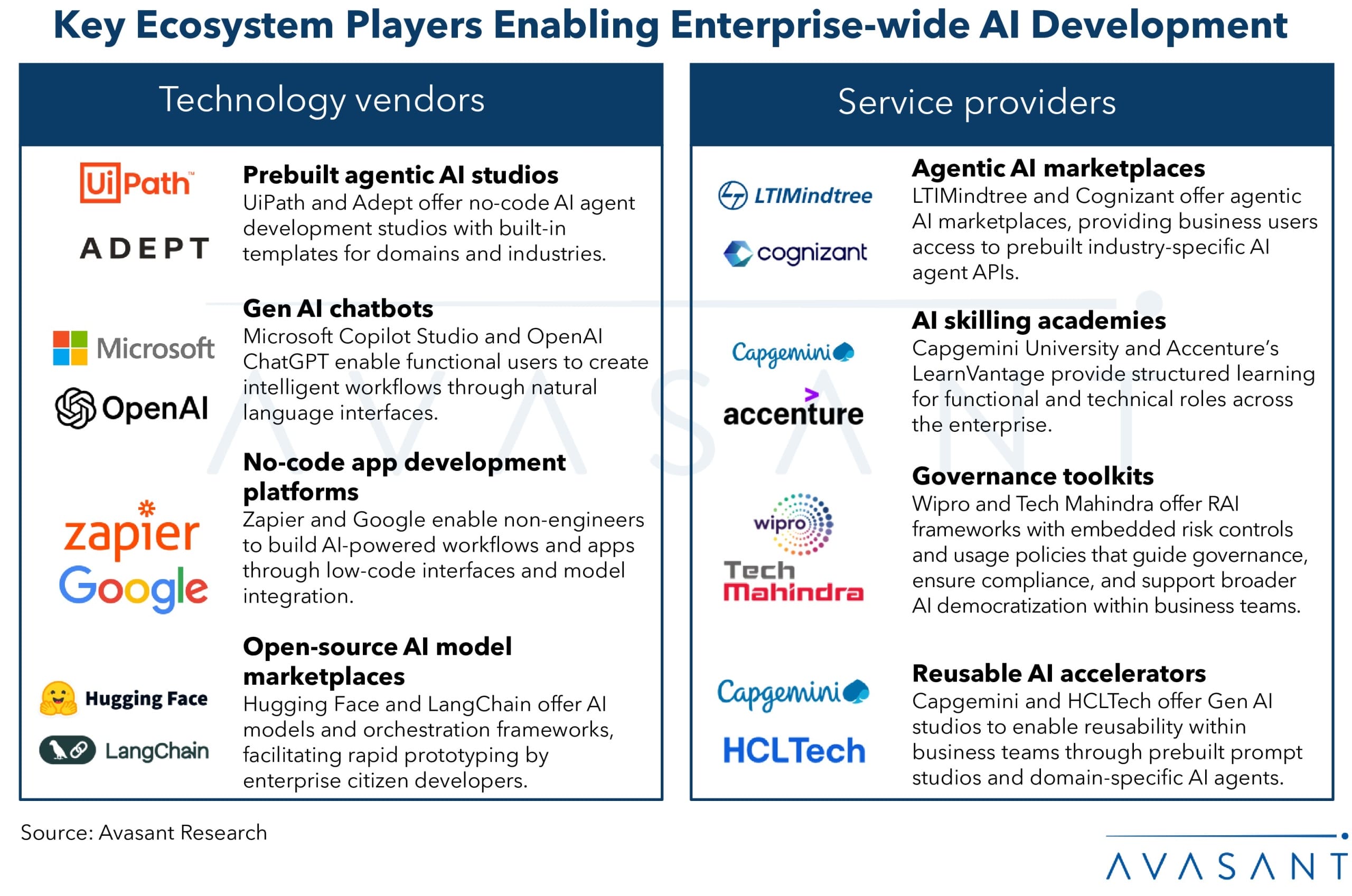

The push toward democratizing AI is gaining momentum, driven by complementary efforts from technology vendors and service providers. Vendors are simplifying access through no-code tools, open-source models, and generative interfaces, while service providers are enabling enterprise adoption through skilling academies, responsible AI governance, and prebuilt marketplaces of reusable, domain-specific AI agents.

Together, these ecosystems are lowering the barriers for business users to codevelop, fine-tune, and safely deploy AI, marking a shift from centralized innovation to distributed, domain-led AI ownership across the enterprise.

With these investments growing, enterprises face mounting pressure to adopt a structured approach to advancing AI. As outlined in Avasant’s AI Value Realization Framework, enterprises must focus on the entire value chain to identify, measure, and track AI initiatives and realize true business value from their investments.

Recommendations for Enterprise Leaders

The shift from task automation to autonomous orchestration signals a fundamental redefinition of how enterprise users engage with AI. As the locus of decision-making moves from data teams to domain teams, enterprises must invest in fine-grained controls, explainability layers, and robust fallback protocols.

- Implement fine-grained controls at the configuration and execution layers

Enterprises must establish tiered permissions and policy-driven controls that define who can configure, deploy, and monitor AI agents and what types of actions those agents can perform. To prevent misuse or runaway automation, business-specific constraints (for instance, approval thresholds and data access rules) must be integrated directly into agent workflows.

Why it matters: Agentic AI systems are capable of autonomous decision-making. Without granular controls, the risk of compliance violations or unintended actions rises sharply.
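The tiered permissions and policy-driven constraints described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any product's API: the role names (`Role.VIEWER`, `Role.BUILDER`, `Role.ADMIN`), the `PERMISSIONS` map, and the `AgentPolicy` fields `approval_threshold` and `allowed_datasets` are all hypothetical. The sketch separates two questions: who may configure, deploy, or monitor an agent, and what an agent may do at execution time.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    # Hypothetical user tiers; real deployments would map these to an identity provider.
    VIEWER = 1
    BUILDER = 2
    ADMIN = 3


# Hypothetical tiered permission map: which roles may perform each lifecycle operation.
PERMISSIONS = {
    "monitor": {Role.VIEWER, Role.BUILDER, Role.ADMIN},
    "configure": {Role.BUILDER, Role.ADMIN},
    "deploy": {Role.ADMIN},
}


@dataclass
class AgentPolicy:
    """Business constraints embedded in an agent workflow (illustrative fields)."""
    approval_threshold: float  # actions above this amount need human approval
    allowed_datasets: set[str] = field(default_factory=set)  # data access rule


@dataclass
class Action:
    """A proposed agent action, checked before it is executed."""
    kind: str      # e.g. "payment"
    amount: float
    dataset: str


def can_perform(role: Role, operation: str) -> bool:
    """Return True if the role may perform the lifecycle operation; unknown operations are denied."""
    return role in PERMISSIONS.get(operation, set())


def evaluate_action(policy: AgentPolicy, action: Action) -> str:
    """Classify an agent action as 'allowed', 'blocked', or 'needs_approval'."""
    if action.dataset not in policy.allowed_datasets:
        return "blocked"          # data access rule violated
    if action.amount > policy.approval_threshold:
        return "needs_approval"   # route to a human approver
    return "allowed"
```

In practice, a check like `evaluate_action` would run inside the agent's execution loop before any side effect, so that a `"needs_approval"` result pauses the workflow instead of relying on the agent to police itself.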

- Embed explainability layers across the agent life cycle

Organizations must build AI systems with traceable logic, allowing users and auditors to understand why an agent made a particular decision. They should use LLM explainability tools (for instance, prompt traces and attention visualizers) and traditional model interpretability methods (for instance, SHAP and LIME) to maintain transparency throughout the agent’s planning and execution process.

Why it matters: In regulated or customer-facing industries, unexplained or opaque outcomes can erode trust and result in legal or reputational risk. Explainability is also critical for ongoing performance tuning.
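One way to make agent logic traceable is to record every planning and execution step in a structured, append-only trace that users and auditors can replay. The following Python sketch assumes the agent exposes a stated rationale for each step; the `AgentTrace` and `TraceStep` classes and their fields are hypothetical and do not correspond to any specific explainability tool. It covers prompt-trace-style logging only. Model-level attribution methods such as SHAP and LIME would be applied separately.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class TraceStep:
    """One recorded step in an agent run (hypothetical schema)."""
    step: int
    phase: str      # "plan" or "execute"
    prompt: str     # what the agent was asked, or decided, to do
    rationale: str  # the agent's self-reported reason for this step
    output: str
    timestamp: str  # ISO 8601, UTC


@dataclass
class AgentTrace:
    """Append-only trace of an agent's planning and execution steps."""
    agent_id: str
    steps: list[TraceStep] = field(default_factory=list)

    def record(self, phase: str, prompt: str, rationale: str, output: str) -> None:
        """Append a step, numbering it sequentially and stamping it with UTC time."""
        self.steps.append(TraceStep(
            step=len(self.steps) + 1,
            phase=phase,
            prompt=prompt,
            rationale=rationale,
            output=output,
            timestamp=datetime.now(timezone.utc).isoformat(),
        ))

    def to_json(self) -> str:
        """Serialize the full trace, e.g. for an audit log store."""
        return json.dumps(
            {"agent_id": self.agent_id, "steps": [asdict(s) for s in self.steps]},
            indent=2,
        )

    def explain(self, step: int) -> str:
        """Return a one-line, human-readable explanation of a step (1-based)."""
        s = self.steps[step - 1]
        return f"Step {s.step} ({s.phase}): {s.rationale}"
```

A self-reported rationale is not proof of what drove the model's output, which is why traces like this complement, rather than replace, interpretability methods.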

- Design robust fallback protocols for operational resilience

Companies must ensure that every autonomous agent or Gen AI system has a clearly defined fallback path, whether a human-in-the-loop handoff, auto-escalation to a subject matter expert (SME), or reversion to rule-based systems. Fallbacks should trigger based on confidence thresholds, system anomalies, or user overrides.

Why it matters: Even well-trained agents will encounter edge cases or degraded performance. Enterprises must ensure continuity, especially in high-impact workflows such as finance, customer service, or compliance.
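The fallback triggers described above (confidence thresholds, system anomalies, and user overrides) can be expressed as a small routing function. In this Python sketch, the `Route` options mirror the three fallback paths named in the text, while the `AgentResult` fields and the threshold values `min_confidence=0.8` and `escalation_floor=0.5` are illustrative assumptions, not recommended settings.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN_REVIEW = "human_review"      # human-in-the-loop handoff
    SME_ESCALATION = "sme_escalation"  # auto-escalation to a subject matter expert
    RULE_BASED = "rule_based"          # reversion to a deterministic rule-based system


@dataclass
class AgentResult:
    """Hypothetical agent output plus the signals used to decide on a fallback."""
    answer: str
    confidence: float           # assumed calibrated score in [0, 1]
    anomaly: bool = False       # raised by system monitoring (e.g. error spikes)
    user_override: bool = False


def choose_route(result: AgentResult,
                 min_confidence: float = 0.8,
                 escalation_floor: float = 0.5) -> Route:
    """Pick a fallback path; threshold defaults are illustrative only."""
    if result.user_override:
        return Route.HUMAN_REVIEW      # an explicit user override always wins
    if result.anomaly:
        return Route.RULE_BASED        # the agent itself may be unreliable
    if result.confidence < escalation_floor:
        return Route.SME_ESCALATION    # very low confidence goes to an expert
    if result.confidence < min_confidence:
        return Route.HUMAN_REVIEW      # moderate confidence gets a human check
    return Route.AUTONOMOUS
```

The order of the checks is a design choice: overrides and anomalies are evaluated before confidence because in those cases the agent's own score is not trustworthy. The approach also assumes the confidence score is reasonably calibrated; raw model scores often are not.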

As enterprises embrace the power and promise of agentic AI, it is easy to get swept up in the magic of autonomous workflows, hyperproductivity, and zero-latency operations. But the reality is that AI does not eliminate complexity. It moves it from the user interface to the system architecture, and from the helpdesk ticket to the governance board.

The winners in this era will not be the ones who build the most agents but the ones who build the smartest fences. Organizations must design AI ecosystems that scale innovation without scaling liability and empower every employee without outsourcing accountability to a black box.

By Chandrika Dutt, Research Director, Avasant, and Abhisekh Satapathy, Principal Analyst, Avasant