The AI landscape is evolving faster than most enterprises can keep up. With over 20,000 large and small language models cataloged across platforms such as Hugging Face and arXiv, and new ones launching daily with promises of outperforming their predecessors, enterprises are already grappling with a key question: Which model is right for my business needs?

But model selection is just the tip of the iceberg. The development of agentic AI (systems capable of autonomous, multi-step decision-making) is prompting enterprises to reassess their AI priorities, reorganize their technology infrastructures, and refine how they deliver and evaluate impact. While many organizations were early movers in adopting generative AI (Gen AI), a significant number have yet to realize a tangible ROI. Based on our ongoing conversations with leading service providers, at an aggregate level, only 20% of Gen AI and agentic AI projects have progressed to production, while the rest are stuck in proof-of-concept (POC) or pilot stages.

Why? It often boils down to:

- Chasing hype instead of use case fit

- Lack of consistent benchmarks for productivity gains

- And increasingly, change management challenges

Even when pilots show promising results, business leaders from HR to marketing are asking difficult but necessary questions:

“What happens to my team?”

“If the agent fails in real-world operations, who bears the responsibility?”

“How do I balance automation with workforce trust and continuity?”

At Avasant, we believe that successful AI adoption is no longer about tech-first decisions. It is about strategic orchestration across opportunity discovery, cross-functional alignment, and employee engagement and readiness. Deploying agents is not the endgame; institutionalizing their value is.

Rethinking AI Prioritization: Legacy Approaches Fall Short

Traditional enterprise approaches to AI initiative prioritization have largely centered around proven but narrowly scoped methods. Organizations have often relied on ROI-based filtering, feasibility versus impact scoring, or function-specific prioritization focusing on departments such as marketing, finance, or supply chain. Sometimes, choices were driven by technology-first enthusiasm or experimentation-led models, especially during the early AI adoption phases.

These methods offered clear short-term advantages. They enabled quick delivery of measurable business outcomes, fostered early internal buy-in, and helped build initial organizational experience with AI systems. However, these traditional approaches fall short as enterprises scale their AI ambitions. AI initiatives often remain siloed across departments, preventing synergy across use cases. Valuable models and data assets are not reused, leading to duplicated efforts and missed efficiency gains. Most critically, these approaches lack mechanisms to support long-term scalability and drive enterprise-wide impact. They also tend to underestimate emerging risks—particularly around regulatory compliance, model drift, and ethical AI considerations—leaving enterprises vulnerable as scrutiny around AI intensifies. A more integrated, strategic approach is now essential to unlock enterprise-wide AI value and mitigate risk effectively.

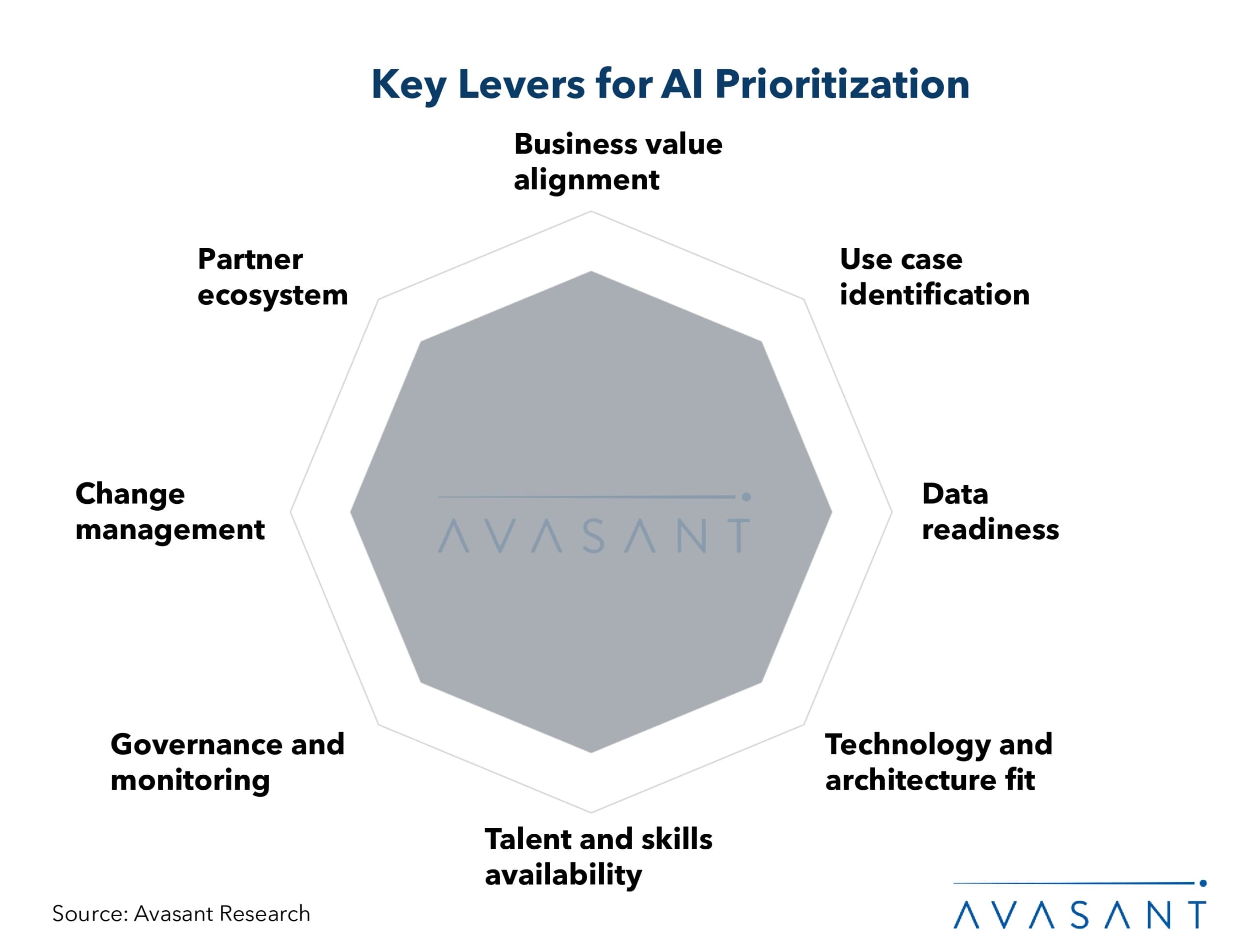

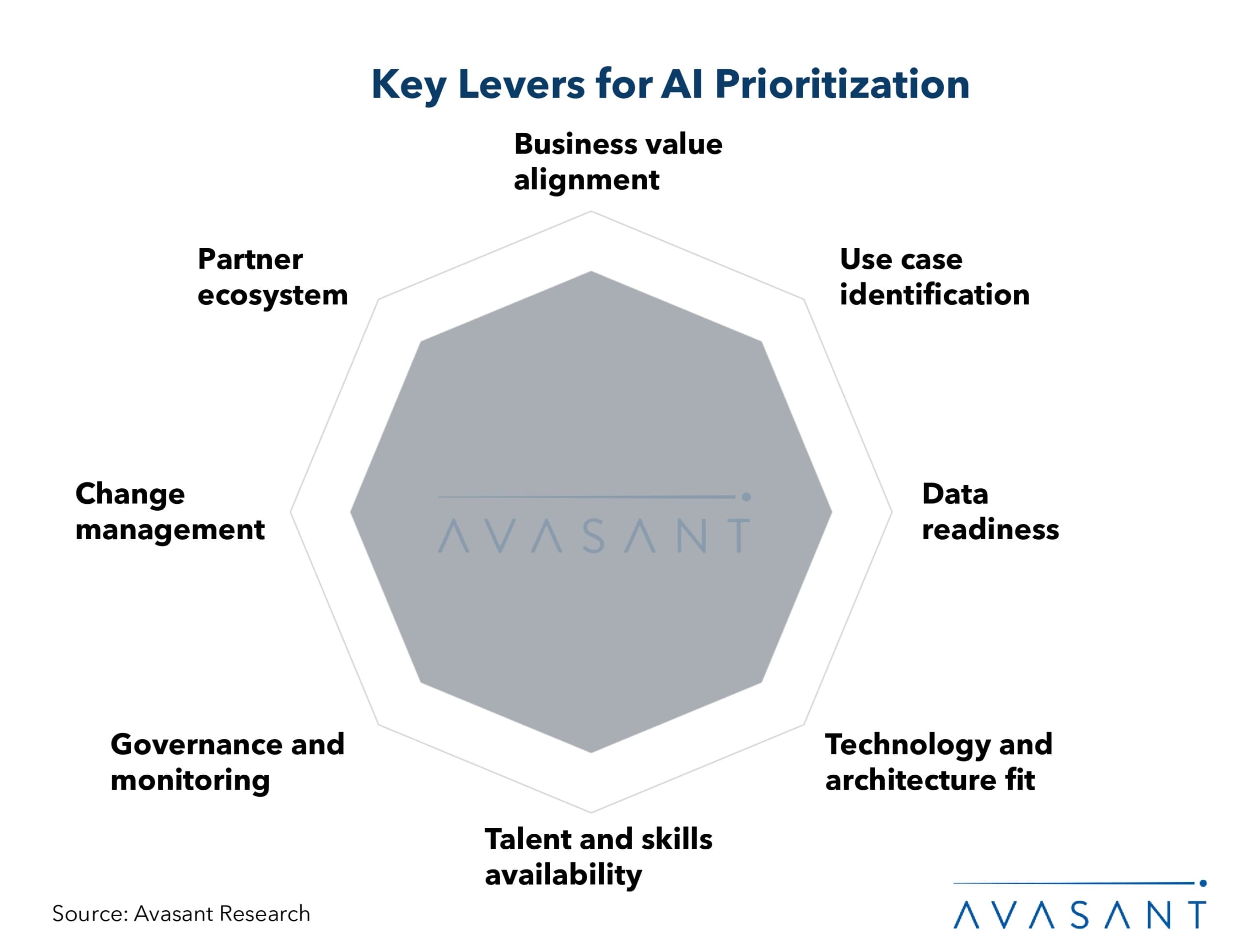

Avasant’s AI prioritization dimensions encompass the multifaceted nature of decision-making when it comes to sequencing and scaling AI initiatives within an enterprise. Unlike traditional methods that may rely heavily on ROI or experimentation alone, our framework encourages a holistic evaluation across eight critical dimensions.

At its core lies business value alignment, ensuring that AI efforts directly contribute to strategic goals such as productivity/efficiency, revenue expansion, or new revenue streams rather than being siloed experiments. Equally important is use case identification, which moves beyond hype to focus on practical, high-impact scenarios tailored to the organization’s industry and operational context. Data readiness serves as the foundation. Without high-quality, transparent data, even the most advanced models will fail to deliver value.

Technology and architecture fit helps avoid workflow integration pitfalls by aligning AI solutions with the enterprise’s digital infrastructure. Meanwhile, talent and skills availability ensures that internal teams are equipped to develop, deploy, and govern AI systems. Governance and monitoring are no longer optional but central to mitigating risks around security, privacy, transparency, and compliance, especially in light of evolving global AI regulations.

Often underestimated, change management becomes the glue that binds AI adoption with organizational acceptance, ensuring workflows, roles, and mindsets evolve accordingly. Lastly, the partner ecosystem dimension reflects the growing importance of external collaboration with startups, academic institutions, hyperscalers, and domain specialists, accelerating innovation while reducing in-house burden.

This balanced, multidimensional approach helps CAIOs and CTOs avoid the trap of narrow prioritization and instead chart a path toward scalable, responsible, and business-aligned AI transformation.
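One way to make a multidimensional evaluation like this concrete is a simple weighted score per initiative. The sketch below is illustrative only: the dimension names mirror the eight dimensions described above, but the weights, the 1-to-5 scoring scale, and the names `Initiative`, `priority_score`, and `rank` are assumptions made for this example, not part of Avasant's framework.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical weights for the eight dimensions; they must sum to 1.0.
# These numbers are assumptions for illustration, not published values.
DIMENSION_WEIGHTS: Dict[str, float] = {
    "business_value_alignment": 0.20,
    "use_case_identification": 0.15,
    "data_readiness": 0.15,
    "technology_architecture_fit": 0.10,
    "talent_skills_availability": 0.10,
    "governance_monitoring": 0.10,
    "change_management": 0.10,
    "partner_ecosystem": 0.10,
}
assert abs(sum(DIMENSION_WEIGHTS.values()) - 1.0) < 1e-9


@dataclass
class Initiative:
    name: str
    scores: Dict[str, float]  # assumed scale: 1 (weak) to 5 (strong)


def priority_score(initiative: Initiative,
                   weights: Dict[str, float] = DIMENSION_WEIGHTS) -> float:
    """Weighted average of the per-dimension scores."""
    missing = set(weights) - set(initiative.scores)
    if missing:
        # Refuse to score an initiative that skipped a dimension, so that
        # gaps (e.g. no change management assessment) are not hidden.
        raise ValueError(f"{initiative.name}: unscored dimensions {sorted(missing)}")
    return sum(weights[d] * initiative.scores[d] for d in weights)


def rank(initiatives: List[Initiative]) -> List[Initiative]:
    """Order initiatives from highest to lowest weighted score."""
    return sorted(initiatives, key=priority_score, reverse=True)


if __name__ == "__main__":
    # Two made-up initiatives, scored by hand for the example.
    claims = Initiative("claims_triage", {d: 4.0 for d in DIMENSION_WEIGHTS})
    claims.scores["data_readiness"] = 2.0
    chatbot = Initiative("hr_chatbot", {d: 3.0 for d in DIMENSION_WEIGHTS})
    chatbot.scores["business_value_alignment"] = 5.0
    for item in rank([claims, chatbot]):
        print(f"{item.name}: {priority_score(item):.2f}")
```

Requiring a score for every dimension is a deliberate choice in this sketch: it keeps an initiative with strong ROI but no governance or change management assessment from rising to the top by omission.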

Elevating AI Strategy with Value-Centric Frameworks

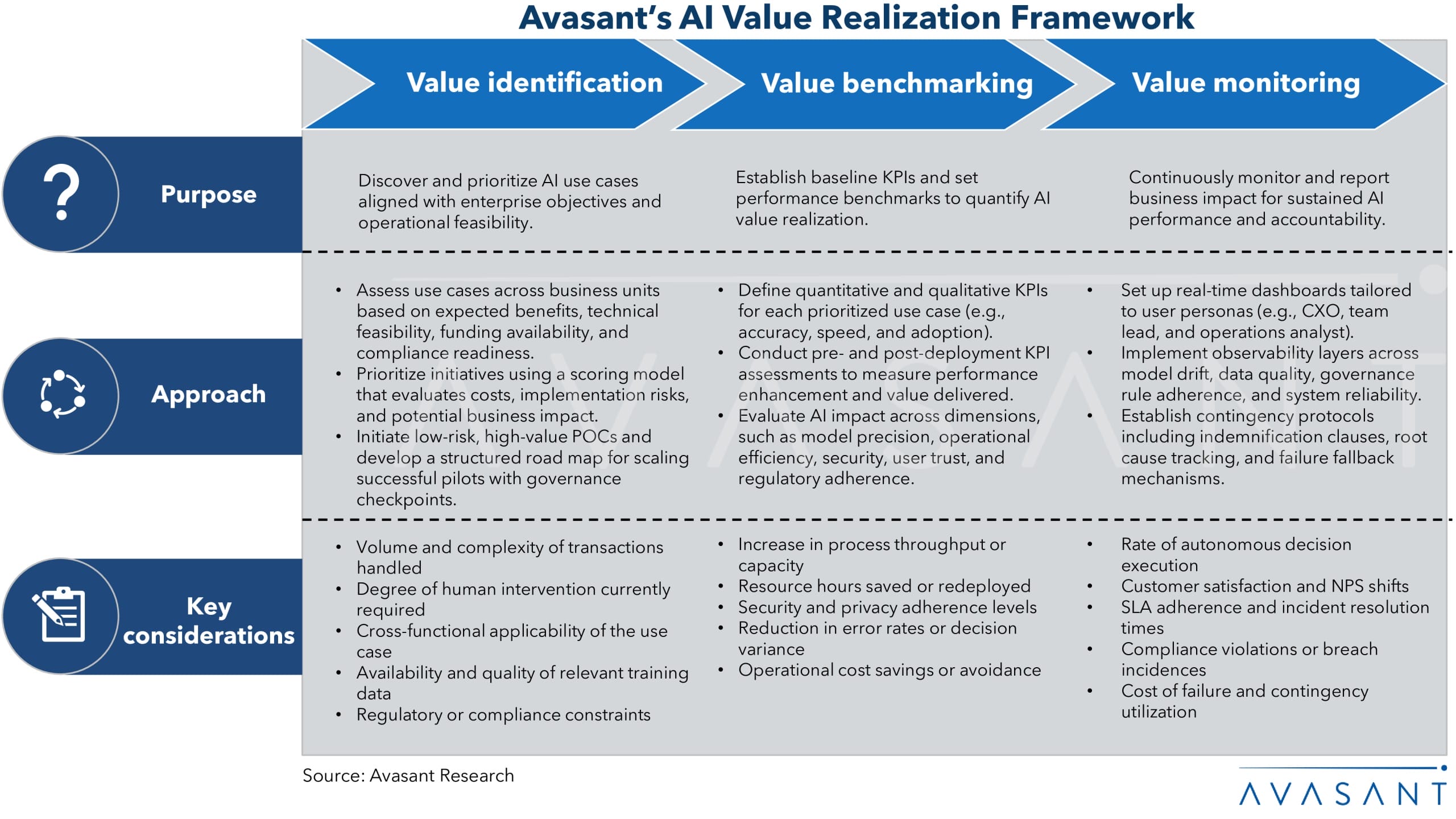

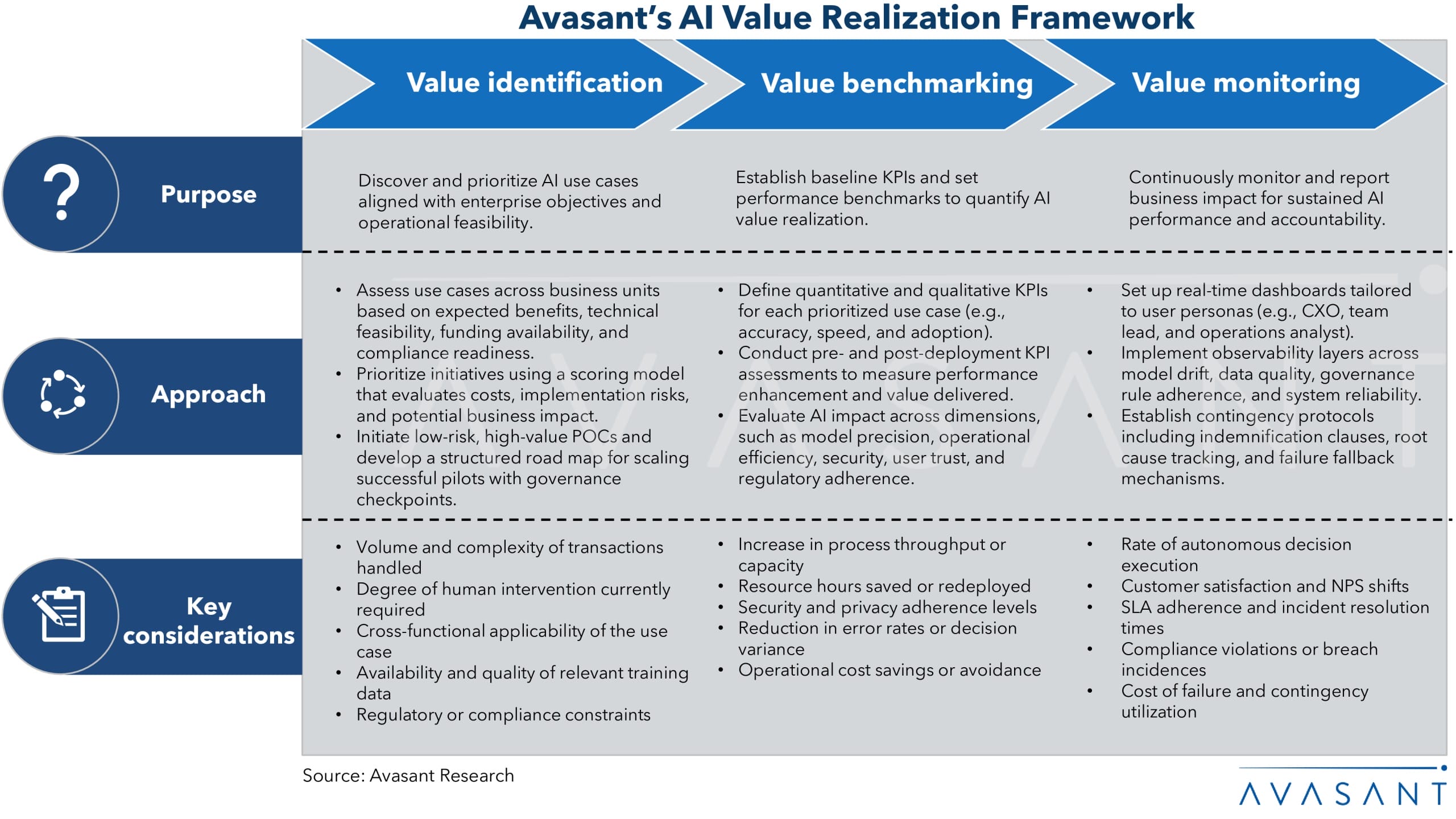

An AI value realization framework helps enterprises move from experimentation to enterprise-wide AI value that is measurable, resilient, and aligned with the organization’s strategic goals, while scaling AI more quickly across the organization.

Avasant’s three-phase AI value realization framework moves beyond one-time ROI calculations to drive sustained, enterprise-wide impact. Systematically identifying, benchmarking, and monitoring AI-driven business value provides a holistic and measurable path to scale strategic AI initiatives.

- In the value identification phase, enterprises prioritize use cases based on feasibility, risk, and tangible benefits, ensuring early wins while building a road map for scale. This is not just about technical readiness; this phase considers process frequency, data maturity, and cross-functional relevance to surface the most actionable opportunities.

- Value benchmarking establishes clear KPIs upfront, covering accuracy, turnaround time, security, and user adoption to objectively quantify impact. Rather than vague outcomes, this phase ties AI performance directly to business levers and operational standards.

- Value monitoring equips leadership with real-time dashboards and observability mechanisms that track efficiency, model drift, and user behavior post-deployment. With fallback protocols and governance baked in, enterprises gain confidence in AI’s reliability at scale.
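The benchmarking and monitoring phases above can be sketched as KPI targets agreed upfront, followed by a post-deployment check that flags breaches and triggers a fallback. This is a minimal illustration under stated assumptions: the KPI names, target values, and the `max_breaches` threshold are hypothetical, as are the names `KpiTarget`, `breached_kpis`, and `should_fall_back`.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class KpiTarget:
    name: str
    target: float
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Hypothetical targets fixed during value benchmarking (assumed values).
TARGETS: List[KpiTarget] = [
    KpiTarget("accuracy", 0.92),
    KpiTarget("turnaround_seconds", 30.0, higher_is_better=False),
    KpiTarget("user_adoption_rate", 0.60),
]


def breached_kpis(observed: Dict[str, float],
                  targets: Sequence[KpiTarget] = TARGETS) -> List[str]:
    """Names of KPIs that miss their target.

    A KPI with no observation counts as breached, on the assumption that
    an unmeasured KPI should not be treated as healthy.
    """
    breached = []
    for t in targets:
        value = observed.get(t.name)
        if value is None or not t.is_met(value):
            breached.append(t.name)
    return breached


def should_fall_back(observed: Dict[str, float],
                     targets: Sequence[KpiTarget] = TARGETS,
                     max_breaches: int = 1) -> bool:
    """Trigger the fallback protocol when more than max_breaches KPIs fail."""
    return len(breached_kpis(observed, targets)) > max_breaches


if __name__ == "__main__":
    # Made-up post-deployment readings for the example.
    this_week = {"accuracy": 0.88, "turnaround_seconds": 45.0,
                 "user_adoption_rate": 0.71}
    print("Breached:", breached_kpis(this_week))
    print("Fall back to manual process:", should_fall_back(this_week))
```

In practice the fallback condition would be set by the governance policy for each use case; a single breach of a compliance-related KPI might warrant a stricter rule than the count-based threshold assumed here.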

Key Recommendations for Enterprise Leaders

Successful AI adoption demands a balanced strategy that integrates engineering rigor with business accountability and social responsibility. This involves rethinking performance metrics, partner engagement, and risk allocation in contracts. The following recommendations outline how forward-looking enterprises can build scalable, resilient AI programs grounded in outcomes and protected against potential liabilities.

- Retire outdated KPIs to measure real business impact: Many enterprises continue to assess AI initiatives using legacy performance metrics that fail to capture AI’s dynamic, probabilistic, and iterative nature. Replace these with new KPIs centered on AI performance and adoption, focusing on metrics such as model drift impact, cost-to-value ratio, user adoption velocity, and accuracy-to-risk balance. For instance, instead of measuring uptime alone, assess decision precision and compliance alignment over time.

- Adopt outcome-based models for high-value use cases: In areas with substantial business upside and definable outcomes, such as fraud detection, claims processing, and personalized healthcare, enterprises should co-invest with ecosystem partners through outcome-based models. This not only aligns incentives but also distributes both value and risk. For example, in AI-led medical imaging diagnostics, shared accountability between provider and enterprise can accelerate deployment while containing liability.

- Strengthen contractual provisions and operating models to offset high-exposure risks: As AI agents increasingly handle sensitive or customer-facing tasks, working with third parties requires including provisions for proactive risk mitigation. Service providers now include indemnity pricing in contracts, depending on the level of financial or regulatory risk associated with the AI agent. Enterprises should push for tailored indemnity clauses, especially when deploying AI in high-impact zones, such as customer service automation and financial recommendations, and treat indemnity as a strategic control, not just a legal safeguard.

As enterprises scale AI investments, success will hinge not just on the right talent or AI model but on consistent value realization. Adopting a robust AI value realization framework enables CAIOs and CTOs to connect strategic intent with measurable outcomes, providing a structured approach to prioritize initiatives, align stakeholders, and monitor impact over time. This shift from fragmented experimentation to disciplined orchestration is essential for turning AI ambition into sustained business value.

By Chandrika Dutt, Research Director, Avasant, and Abhisekh Satapathy, Principal Analyst, Avasant