In the current era, responsible AI has become a cornerstone for sustainable innovation, as the rapid proliferation of AI technologies intersects with societal, ethical, and regulatory concerns. Organizations are under increasing pressure to ensure that AI systems are fair, transparent, and inclusive, addressing risks like algorithmic bias, privacy breaches, and unintended misuse.

Governments and enterprises are emphasizing practices such as explainability, continuous monitoring, and robust data governance to build trust and accountability in AI applications. With global initiatives such as the EU AI Act, UNESCO’s ethics framework, and industry-led responsible AI toolkits, the focus is shifting from merely deploying AI to deploying it responsibly.

According to the Avasant Generative AI Services Market Insights 2024 report, 48% of enterprises are partnering with cloud and service providers, primarily for their expertise in responsible AI, industry/domain blueprints, and LLM orchestration. These providers offer responsible AI solutions and guardrails for generative AI that address risks such as bias, privacy breaches, hallucinations, cybersecurity threats, and copyright infringement.

Additionally, they have strong expertise in building compliance with region-specific AI regulations into client AI systems. This collaborative approach helps ensure that AI not only drives economic growth and operational efficiency but also aligns with broader human and societal values, reinforcing its role as a force for equitable and positive impact.

The recent Infosys Topaz Responsible AI Summit 2025, a collaborative effort with the British High Commission, provided a platform to discuss these issues. Held on February 26, 2025, at Infosys’ Bengaluru campus, the summit brought together industry leaders, policymakers, and technologists to examine the imperative of aligning AI innovation with ethical and regulatory standards.

The Rise of AI Toolkits: Bridging the Governance Gap

As organizations accelerate their AI adoption, they are increasingly grappling with governance, compliance, and implementation challenges. In response, AI toolkits are emerging as indispensable resources. A key highlight of the summit was the launch of the Infosys Responsible AI Toolkit, a comprehensive framework tested with over 100 organizations that aims to provide practical tools for navigating the complexities of responsible AI implementation. Available on GitHub, the toolkit underscores Infosys’ commitment to fostering innovation while upholding ethical AI practices.

Global Collaboration: A Cornerstone for Ethical AI

The summit emphasized that the evolution of AI into a global asset necessitates shared responsibility for innovation and ethical frameworks. In her keynote address, Baroness Gustafsson, the UK’s Minister for Investment, highlighted the unique opportunity for the UK and India to jointly drive AI innovation. She underscored the growing recognition among governments that AI development is no longer optional, citing the UK’s ambitious plans to enhance processing infrastructure and its hosting of the AI Safety Summit in 2023.

A recurring theme was the delicate balance between innovation and regulation, with both nations acknowledging the importance of collaborative efforts to ensure AI safety and ethical deployment.

Nandan Nilekani, Infosys’ co-founder and chairman, stressed that development and regulation must progress in tandem. He positioned the Infosys Responsible AI Toolkit as a critical enabler for this dual objective while highlighting the potential for collaborative innovation by leveraging India’s vast talent pool and the UK’s advanced AI research ecosystem.

Real-World Value: Driving Trust and Adoption

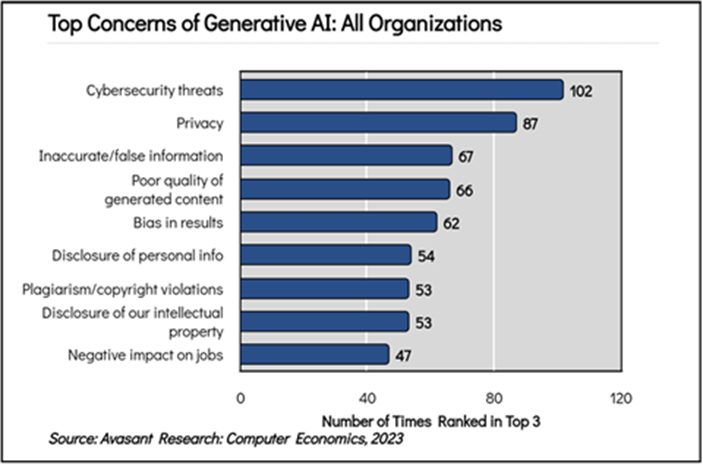

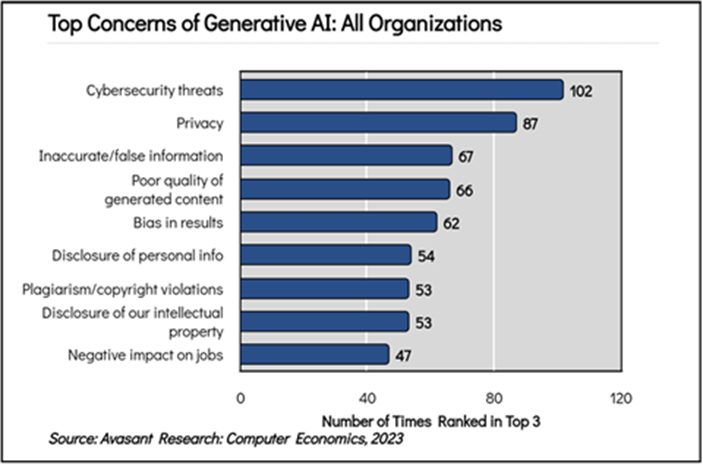

Generative AI is not without its downsides: there are fears around cybersecurity, plagiarism, data loss, and other issues. According to the Avasant Generative AI Strategy, Spending, and Adoption Metrics 2024 report, cybersecurity threats, privacy risks, false information, and poor content quality are the top concerns in generative AI adoption.

This brings into focus the debate over light-touch versus heavy regulation, and how to foster innovation while safeguarding consumer data. Panelists at the summit shared examples such as Aadhaar usage in India, showing that practical applications of AI, from personalized tools to national identity initiatives, are driving trust and adoption among diverse user bases. Real-world value creation, in other words, is critical to both.

A central theme of the summit was the importance of building trust in AI technologies, particularly among non-technical users. The summit also highlighted the role of ethics in AI development.

An ideal approach to responsible AI involves integrating ethics bodies into the ideation and design phases and ensuring explainability and continuous monitoring of AI systems. The summit highlighted the need for global collaboration, exemplified by Infosys’ agreement with UNESCO to adopt its Ethical AI Framework and a partnership with the Karnataka government to integrate the Infosys Responsible AI Toolkit into the state’s startup booster kit.

Conclusion: A Collaborative Path Forward

The future of AI development lies in open, inclusive networks that foster innovation across diverse domains, creating sustainable and equitable solutions. There is a need to combine global expertise and foster public-private partnerships, which play a critical role in shaping AI innovation and regulatory frameworks for the future.

The Infosys Topaz Responsible AI Summit 2025 emphasized the need for collaboration between governments, industry, and academia to ensure responsible AI development.

As AI continues to evolve, it is crucial to balance technological advancement with societal well-being, underscoring the need to create AI systems that are not only innovative but also trustworthy, transparent, and beneficial to all. With initiatives such as its Responsible AI Toolkit and a strong emphasis on ethics and innovation, Infosys is poised to lead the global discussion on responsible AI.

By Praveen Kona, Associate Research Director, Avasant