Server virtualization is becoming the norm in many data centers. In a shop where virtualization is well established, system engineers can often satisfy a request for a new server simply by creating a new operating system (OS) instance instead of building a new physical server. As our previous study, Server Support Staffing Ratios, showed, server virtualization can greatly reduce the cost of data center operations.

However, when server virtualization is the norm, it becomes more difficult to normalize spending by server. The number of physical servers is no longer a sufficient basis for comparing data center spending. Instead, such metrics should be based on the number of server instances, regardless of how many physical servers host them. The goal is to deliver an acceptable level of service at the lowest possible cost per server instance.
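As a minimal sketch of why the denominator matters, the snippet below divides the same annual spend by the number of physical servers and by the number of OS instances. All figures are hypothetical and are not drawn from the study; the `cost_per_unit` helper is likewise illustrative.

```python
def cost_per_unit(total_annual_cost: float, units: int) -> float:
    """Annual cost divided by a count of units (physical servers or OS instances)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_annual_cost / units


# Hypothetical shop: $2.4M annual spend, 40 physical hosts running 120 OS instances.
total_cost = 2_400_000
physical_servers = 40
os_instances = 120

per_physical = cost_per_unit(total_cost, physical_servers)
per_instance = cost_per_unit(total_cost, os_instances)

print(f"Cost per physical server: ${per_physical:,.0f}")  # $60,000
print(f"Cost per OS instance:     ${per_instance:,.0f}")  # $20,000
```

Because a consolidated host may run many instances, cost per physical server rises with virtualization density even when service delivery has not changed, which is why cost per instance is the more comparable figure.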

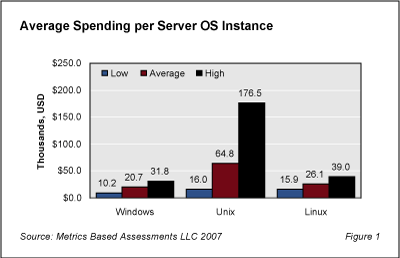

Based on data collected by our research partner, Metrics Based Assessments, Figure 1 shows the range of expected spending per OS instance for Windows, Unix, and Linux servers. Costs shown include the following:

- Data center hardware, excluding network hardware

- Operating system, utilities, database, and other infrastructure software (applications software costs are not included)

- Data center personnel

- Data center supplies, except for printer supplies

- Other data center costs, such as facilities, data center outsourcing, disaster recovery, offsite storage, and data center overhead
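The cost definitions above amount to summing the included categories, setting the exclusions aside, and dividing by the number of OS instances. A minimal sketch, assuming a hypothetical ledger whose category names and amounts are invented for illustration:

```python
# Hypothetical annual cost ledger for one platform; amounts are illustrative only.
ledger = {
    "server_hardware": 500_000,
    "network_hardware": 150_000,         # excluded from the metric
    "infrastructure_software": 300_000,  # OS, utilities, database
    "application_software": 400_000,     # excluded from the metric
    "personnel": 900_000,
    "supplies": 40_000,
    "printer_supplies": 10_000,          # excluded from the metric
    "other": 260_000,                    # facilities, outsourcing, DR, offsite storage, overhead
}

# Categories that the cost definition leaves out.
EXCLUDED = {"network_hardware", "application_software", "printer_supplies"}


def included_cost(entries: dict[str, float]) -> float:
    """Sum every ledger entry that is not in the excluded set."""
    return sum(amount for name, amount in entries.items() if name not in EXCLUDED)


os_instances = 100  # hypothetical instance count
total = included_cost(ledger)
print(f"Included cost: ${total:,.0f}; per instance: ${total / os_instances:,.0f}")
```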

Based on these definitions, Windows has the lowest average annual cost per OS instance, at $20,700. The average Linux instance costs somewhat more, at $26,100, and Unix has the highest average annual cost of operation, at $64,800. Figure 1 also shows the highest and lowest costs reported for each platform.
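The three averages can be turned into simple ratios to check how far apart the platforms actually are. This sketch uses only the averages reported in the text:

```python
# Average annual cost per OS instance, as reported in the article.
avg_cost = {"Windows": 20_700, "Linux": 26_100, "Unix": 64_800}


def ratio(a: str, b: str) -> float:
    """How many times platform a's average cost is platform b's."""
    return avg_cost[a] / avg_cost[b]


for a, b in [("Linux", "Windows"), ("Unix", "Windows"), ("Unix", "Linux")]:
    print(f"{a} / {b}: {ratio(a, b):.2f}x")
# Linux / Windows: 1.26x
# Unix / Windows: 3.13x
# Unix / Linux: 2.48x
```

Linux averages about 26% more than Windows per instance, while Unix averages roughly three times the Windows figure and about two and a half times the Linux figure. These ratios describe the reported averages only; they say nothing about why the costs differ.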

The ranges in Figure 1 can serve as a rough benchmark: an organization can compare its own cost per server instance with the experience of other organizations running the same platform.
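One way to use Figure 1 as a rough benchmark is to place your own cost per instance relative to the low, average, and high values for your platform. In this sketch the `PeerRange` and `position` names are hypothetical, the average is the Linux figure from the text, and the low and high values are placeholders because the figure's actual values are not reproduced here:

```python
from dataclasses import dataclass


@dataclass
class PeerRange:
    """Reported low, average, and high annual cost per OS instance for one platform."""
    low: float
    average: float
    high: float


def position(cost: float, peers: PeerRange) -> str:
    """Describe where an organization's cost per instance falls within the peer range."""
    if cost < peers.low:
        return "below the lowest reported cost"
    if cost > peers.high:
        return "above the highest reported cost"
    return "below average" if cost < peers.average else "at or above average"


# Placeholder low/high values (hypothetical); average is the Linux figure from the text.
linux = PeerRange(low=10_000, average=26_100, high=60_000)
print(position(31_000, linux))  # at or above average
```

A result outside the reported range is a prompt to look more closely at workload mix and cost definitions, not proof of over- or under-spending.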

It is tempting to conclude from Figure 1 that Windows is the low-cost operating system, followed closely by Linux, and that Unix costs more than double either of them. However, this conclusion is not warranted. The main factor behind the differences in cost is the type of applications that run on each platform. For example, among the survey participants, 20% of the Windows server instances run e-mail, collaboration, file serving, and print services, applications that require relatively little support. By contrast, approximately 30% of the Unix server instances run high-end business applications, such as ERP or CRM systems, which require a higher level of system administrator support. Among the Linux OS instances, 27% run such applications.
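To illustrate how workload mix alone can move an average, here is a hypothetical weighted-average model. The per-workload costs, the workload classes, and both mixes are invented for illustration; only the idea that high-end applications such as ERP and CRM cost more to support comes from the text.

```python
# Hypothetical annual cost per instance by workload class, identical on both platforms.
workload_cost = {"light": 12_000, "general": 25_000, "high_end": 70_000}


def blended_cost(mix: dict[str, float]) -> float:
    """Weighted average cost per instance for a workload mix whose shares sum to 1."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("workload shares must sum to 1")
    return sum(share * workload_cost[w] for w, share in mix.items())


# Hypothetical mixes: platform A hosts few high-end applications, platform B many.
platform_a = {"light": 0.50, "general": 0.45, "high_end": 0.05}
platform_b = {"light": 0.10, "general": 0.60, "high_end": 0.30}

print(f"Platform A: ${blended_cost(platform_a):,.0f}")  # $20,750
print(f"Platform B: ${blended_cost(platform_b):,.0f}")  # $37,200
```

Both platforms cost the same for each workload class, yet B's average is far higher purely because of its heavier share of high-end applications.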

Total cost of ownership (TCO) comparisons among Windows, Linux, and Unix are a hotly debated subject. Each operating system has its advantages and disadvantages, and a fair comparison can be made only for a specific workload in a particular organization.

February 2007

Statistics in this article were provided by Mark Levin, a Partner at Metrics Based Assessments, LLC, and are drawn from data center benchmarking studies conducted over the past 12 months. The full set of metrics is available in his book, *Best Practices and Benchmarks in the Data Center*.