The comparison of AI’s evolution to Moore’s Law, as highlighted in Satya Nadella’s keynote at Microsoft Ignite 2024—with performance doubling every six months—underscores the transformative potential of AI across industries.
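
To put the six-month doubling cadence in perspective, the arithmetic can be sketched as follows. This assumes steady exponential growth and takes the commonly cited two-year doubling period for Moore’s Law as the baseline. Both are simplifying assumptions for illustration; only the six-month figure comes from the keynote. Here $P_0$ is the starting performance, $t$ is elapsed time in years, and $T$ is the doubling period in years.

$$
P(t) = P_0 \cdot 2^{t/T}, \qquad
\left.\frac{P(2)}{P_0}\right|_{T=0.5} = 2^{2/0.5} = 16, \qquad
\left.\frac{P(2)}{P_0}\right|_{T=2} = 2^{2/2} = 2
$$

Under these assumptions, two years at a six-month doubling period compound to roughly a 16x gain, compared with 2x at the classic Moore’s Law pace.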

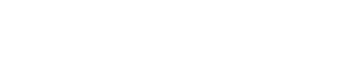

Major cloud platform providers are taking the lead in reshaping productivity paradigms and reinforcing AI’s critical role in addressing modern business challenges by owning the entire solution stack, from AI hardware chips through middleware support, including foundation models for generative AI (Gen AI), to enterprise applications. This trend is depicted in Avasant’s Cloud Platform 2024 RadarView™.

Figure 1: Major cloud platform providers’ offerings across the AI stack

At the event, Nadella and his business leadership team wove together several of these areas.

Building an Agentic World with Copilot

The vision for Copilot extends beyond immediate productivity gains to a broader transformation of business ecosystems:

- Adoption: Accelerating usage across organizations to realize value faster. For instance, at the Bank of Queensland Group, Copilot significantly reduced the time required for risk analysis and reporting from weeks to a day.

- Extensibility: Using Copilot Studio to create bespoke agents that streamline business processes and meet unique business needs, from HR to supply chain management. Companies like Dow have built agents to optimize logistics, achieving significant cost savings and operational efficiency.

- Measuring Impact: Copilot Analytics ties usage to key business metrics, enabling organizations to quantify ROI and align AI initiatives with strategic goals.

Last month, Microsoft introduced more than 10 autonomous agents in Dynamics 365 to augment various processes, including supply chain, customer service, sales and marketing, and operations. It is also leveraging its ecosystem to its advantage, as many of its partners have built their own agents and connectors in Microsoft 365 Copilot. These partners include Adobe, Cohere, LinkedIn, SAP, ServiceNow, and Workday.

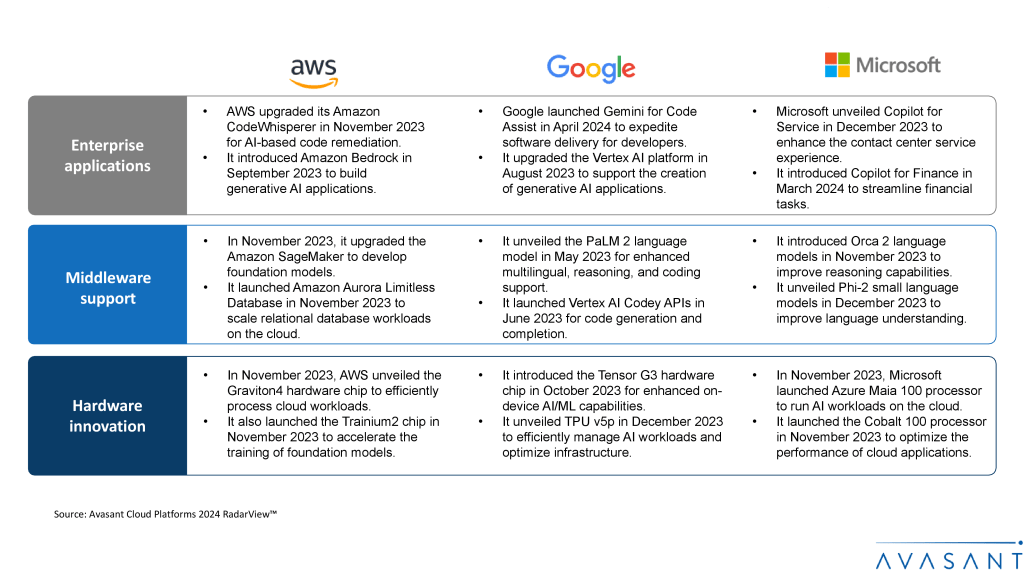

In our recently launched Cloud ERP Suites 2024 RadarView, we showcased how leading ERP vendors integrate Gen AI with their applications, which access business data to generate contextual insights across ERP operations, including finance, supply chain, and HR.

Figure 2: Cloud ERP vendors advance Gen AI with interactive agents for complex, multifunctional workflow automation

Although there are many agents and third-party connectors, all of them are tightly integrated and available in Copilot, which acts as a single UI for performing actions. These actions include lead prioritization, client research, and personalized outreach, all without opening multiple applications, which leads to faster and smarter decision-making.

Empowering Enterprises with Cloud-Native Autonomous Databases

Data is the backbone of AI workloads, providing the essential fuel for training models, generating insights, and driving decision-making across industries. As the demand for AI workloads increases, cloud platform vendors are enhancing their database offerings to handle the ever-increasing volume and complexity of data. In March 2024, Oracle announced its Globally Distributed Autonomous Database, which enables customers to create cloud-native distributed databases and autonomously manage database patching, security, tuning, and performance scaling while addressing sovereignty requirements.

Microsoft has also embraced the trend by introducing the Fabric database at its Ignite 2024 event. This database brings the capabilities of its flagship SQL Server natively to the Fabric platform. It can support businesses managing batch and real-time transactional data while offering autonomous database provisioning services. With the launch of Microsoft’s Fabric database with SQL Server integration, it will be interesting to see how this unified platform empowers businesses to manage vast datasets, apply AI at scale, and deliver automated insights in an increasingly data-driven world.

Developing Custom Hardware to Meet AI Workload Demands

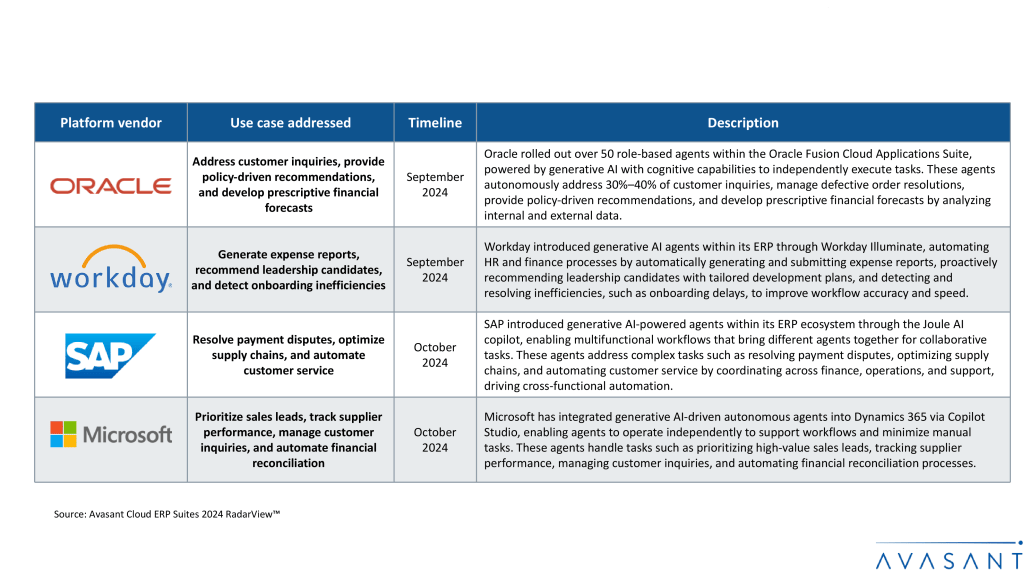

As enterprise interest in large language model (LLM) adoption continues to rise, demand for robust Gen AI infrastructure is growing, driven by diverse needs such as reduced power consumption, faster inferencing, affordable pricing, deployment flexibility, and specialized chips. This shift has prompted vendors to innovate, building and refining chip architectures for lower latency and enhanced energy efficiency.

This trend is not limited to cloud platform vendors. Service providers and Gen AI platform vendors are making equal strides in chip development and refinement, as showcased in our Generative AI Infrastructure Suite 2024 RadarView™.

Figure 3: The ecosystem continues to invest in augmenting its hardware capabilities

At the event, Nadella highlighted Microsoft’s continued focus on hardware innovation through a series of advancements in chip development. He emphasized how the Cobalt 100 virtual machines, announced at Ignite 2023, have given customers and partners such as Databricks, Siemens, and Snowflake a 50% improvement in price performance. He also discussed how Microsoft uses the Maia 100 AI accelerator internally to manage customer support workloads and power Azure OpenAI inferencing.

This year, Microsoft unveiled two new hardware products: Azure Integrated Hardware Security Module (HSM), a cloud security chip within Azure servers that focuses on key management and encryption to enhance performance and security; and Azure Boost DPU, which runs cloud storage tasks with less power and improves the efficiency of data-centric workloads.

Additionally, the integration of NVIDIA’s Blackwell AI infrastructure into Azure and the co-engineering with AMD of the custom chip behind Azure HBv5 virtual machines showcase Microsoft’s collaborative initiatives to codevelop innovative hardware products. Other cloud platform providers, such as AWS, are also making significant strides in hardware co-innovation. In September 2024, AWS entered a multibillion-dollar partnership with Intel to develop a custom AI fabric chip on Intel’s 18A process and a custom Xeon 6 chip. These advancements signal a shift in the cloud landscape, where cutting-edge, co-engineered chips are empowering organizations to run AI workloads with greater performance and efficiency.

Conclusion

Microsoft’s advancements, from integrating AI-driven capabilities such as Copilot across its ecosystem to unveiling cutting-edge hardware and cloud-native databases, showcase its commitment to continuous innovation across the cloud stack in a rapidly evolving landscape. These initiatives can boost productivity, simplify decision-making processes, and unlock greater insights and value from data. Enterprises that embrace these advancements can maintain a strong edge in today’s fast-paced, AI-driven market.

By Dhanusha Ramakrishnan, Lead Analyst, and Gaurav Dewan, Research Director, Avasant