Following the cultural and technological explosion brought forth by the launch of OpenAI’s ChatGPT last fall, the security industry has seen enormous investment in generative AI technologies such as large language models (LLMs). While players like Google and Microsoft have launched new generative AI security products, IBM, CrowdStrike, and Tenable have integrated generative AI features into their existing products. Though each product has its own capabilities, the common focus is automating threat hunting and prioritizing breach alerts.

However, the rise of generative AI also creates opportunities for threat actors. It has potentially lowered the barrier to entry for cybercriminals to develop sophisticated business email compromise phishing campaigns, find and exploit zero-day vulnerabilities, probe critical infrastructure, create and distribute malware, and much more.

But isn’t that always the case? Hackers and security vendors are perpetually engaged in a cat-and-mouse game, and now generative AI has added a new dimension to it.

All said and done, generative AI has had a positive effect on many organizations. CIOs and CISOs have started to realize that, from a security point of view, they cannot keep pace and scale with the ever-evolving threat landscape without entrusting certain decision-making responsibilities to AI models. Generative AI strengthens this case further.

Supercharging Security with Generative AI

The more progressive enterprises view this as a good opportunity to explore incorporating more AI into various threat classification processes and cybersecurity strategies.

In fact, the leading security vendors have taken steps to incorporate generative AI into their security systems, enhancing the intelligence of their automated incident response mechanisms. This involves exploring the following three use cases:

- Navigate, filter, and prioritize alerts that are received through the SIEM/SOAR platform.

- Create automated response workflows, thereby decreasing the reliance on Tier-3 or Tier-4 security operators and empowering Tier-1 or Tier-2 teams.

- Automate the assignment of security tasks to the most appropriate analyst based on skills, incident type, and threat level.
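
The first and third use cases above can be illustrated with a minimal Python sketch. It is a simple rule-based stand-in, not any vendor's method: the severity weights, the 1-to-5 asset criticality scale, and every class, function, and analyst name are hypothetical assumptions made for illustration. A production system would rely on the SIEM/SOAR platform's own data and, potentially, an LLM to classify incidents.

```python
from dataclasses import dataclass

# Hypothetical severity weights (an assumption for this sketch).
SEVERITY_WEIGHT = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class Alert:
    alert_id: str
    incident_type: str      # e.g. "phishing" or "malware"
    severity: str           # key into SEVERITY_WEIGHT
    asset_criticality: int  # assumed scale: 1 (low) to 5 (business critical)


@dataclass
class Analyst:
    name: str
    skills: set
    open_tasks: int = 0


def priority(alert):
    """Score an alert: higher severity on a more critical asset ranks first."""
    return SEVERITY_WEIGHT[alert.severity] * alert.asset_criticality


def prioritize(alerts):
    """Return alerts sorted from highest to lowest priority."""
    return sorted(alerts, key=priority, reverse=True)


def assign(alerts, analysts):
    """Give each alert to the least-loaded analyst with a matching skill."""
    assignments = {}
    for alert in prioritize(alerts):
        qualified = [a for a in analysts if alert.incident_type in a.skills]
        pool = qualified or analysts  # fall back to anyone if no skill matches
        chosen = min(pool, key=lambda a: a.open_tasks)
        chosen.open_tasks += 1
        assignments[alert.alert_id] = chosen.name
    return assignments


alerts = [
    Alert("A1", "phishing", "medium", 2),
    Alert("A2", "malware", "critical", 5),
    Alert("A3", "phishing", "high", 4),
]
analysts = [
    Analyst("dana", {"phishing"}),
    Analyst("lee", {"malware", "phishing"}),
]

ranked = [a.alert_id for a in prioritize(alerts)]
assignments = assign(alerts, analysts)
print(ranked)
print(assignments)
```

With this sample data, the critical malware alert on the most critical asset (A2) is ranked first and routed to the only analyst with malware skills, while the phishing alerts go to whichever qualified analyst has the fewest open tasks.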

As expected, cloud service providers (CSPs) assumed a prominent role, utilizing their exclusive LLMs. The following examples provide an overview of this:

- In March 2023, Microsoft announced Security Copilot, an AI assistant driven by GPT-4. This assistant supports security experts by accomplishing two key tasks based on their prompts: recognizing signs of potential threats and summarizing details of security breaches. It harnesses Microsoft’s extensive collection of proprietary data and employs a specialized security model designed for security-related queries.

As a result, it not only enhances the speed of threat detection by reducing the time required for a response from hours or days to mere minutes but also aids in identifying potential security vulnerabilities that might be overlooked by other methods, thus addressing process gaps.

- During the RSA Conference in April 2023, Google unveiled the Google Cloud Security AI Workbench, which is built on its security LLM, Sec-PaLM 2. This platform conducts contextual analysis and explains the behavior of potentially harmful scripts, enhancing understanding of the threat environment.

It aids Tier-1 security operators in simplifying the interpretation of threat intelligence findings. It also enables Tier-2 and Tier-3 security operators to concentrate on threat analysis through advanced capabilities such as iterative query and multivariate anomaly detection.

Meanwhile, the specialized security providers integrated generative AI capabilities into their security platforms, primarily to enhance their risk assessment and threat hunting processes. For instance:

- In May 2023, CrowdStrike, popularly known for its Falcon platform that offers endpoint detection and response, threat intelligence, and threat hunting functionality, introduced a new feature called Charlotte AI. This feature uses generative AI to answer users' queries in natural language, expediting the identification, investigation, hunting, and resolution of cybersecurity threats. In a way, this innovation helps narrow the expertise gap and enables Tier-1 and Tier-2 security personnel to effectively manage complex Tier-3 and Tier-4 level inquiries.

- Tenable is known for its exposure management platform, Tenable One, which gives security teams visibility into the entire attack surface. In August 2023, the company integrated a generative AI engine, called ExposureAI, with its platform. This feature builds on the platform's data repository, which encompasses over one trillion distinct exposures, to identify vulnerabilities, misconfigurations, and identities within IT, public cloud, and OT environments.

The main areas of improvement include the ability to search and analyze assets and exposures across various environments, offer guidance for mitigation, and provide actionable insights and recommendations for addressing potential security threats.

Furthermore, companies such as Palo Alto Networks and SentinelOne are creating their exclusive LLMs to enhance their abilities in threat detection and prevention.

Dealing with Cybersecurity Skill Gaps

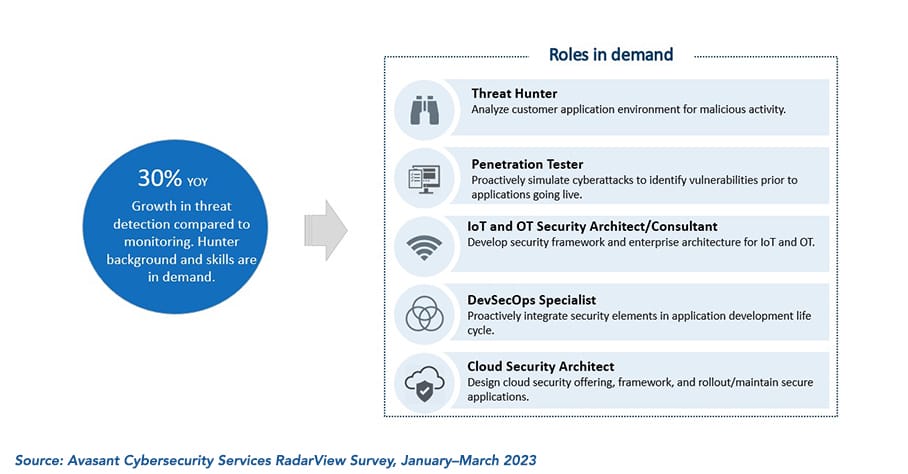

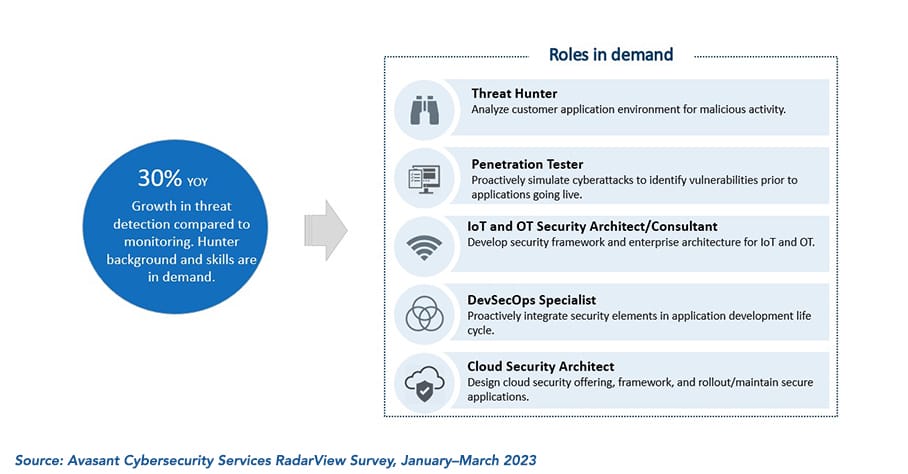

These generative AI capabilities hold even greater significance as the cybersecurity sector grapples with an ongoing shortage of skilled professionals. According to our Avasant Cybersecurity Services 2023 RadarView January–March 2023 Survey, service providers expanded their cybersecurity teams by an average of 30%.

Figure 1: Service providers are doubling down on their cybersecurity workforce

However, this number still falls short of the industry’s need of 3.4 million cybersecurity experts globally, as indicated by research from the International Information System Security Certification Consortium (ISC2), an association for cybersecurity professionals.

According to the Avasant survey, there is a stronger demand for professionals with expertise in threat hunting than for those in threat monitoring. This is where security providers can leverage generative AI capabilities to address the shortage of skilled personnel. The use of automation and AI can empower understaffed teams to perform more efficiently.

Although humans cannot be entirely eliminated from the incident response process, generative AI can automate a large proportion of the lower-value tasks typically performed by analysts. This, in turn, can enable analysts to focus on true positives and other crucial security responsibilities.

Double-edged Sword

Similar to any digital technology, generative AI has its drawbacks. When not used cautiously, there is a continuous risk of data exposure.

In a recent instance, Samsung Semiconductor staff used ChatGPT to turn meeting notes about their hardware into a presentation and to optimize source code for a test sequence that identifies faults. As a result, confidential data was exposed on OpenAI’s servers. Following this incident, Samsung decided to develop its own AI system for internal purposes.

Second, generative AI’s capacity to produce deceptive yet incredibly realistic images, audio, and video provides cybercriminals with a means to bolster their phishing campaigns and carry out more successful social engineering attacks. A noteworthy instance is the fabrication of a false image depicting the Pentagon exploding in May 2023, generated through a text-to-image AI tool. This incident caused the S&P 500 index to drop by 30 points, resulting in a market capitalization shift of $500 billion.

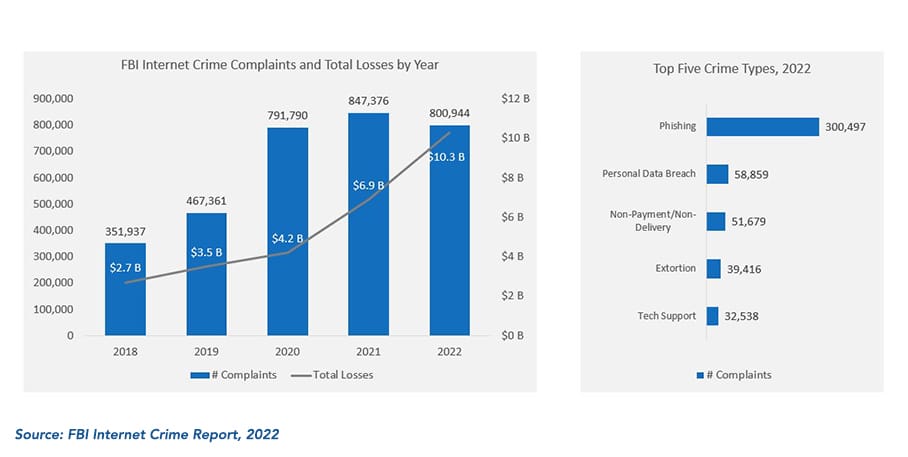

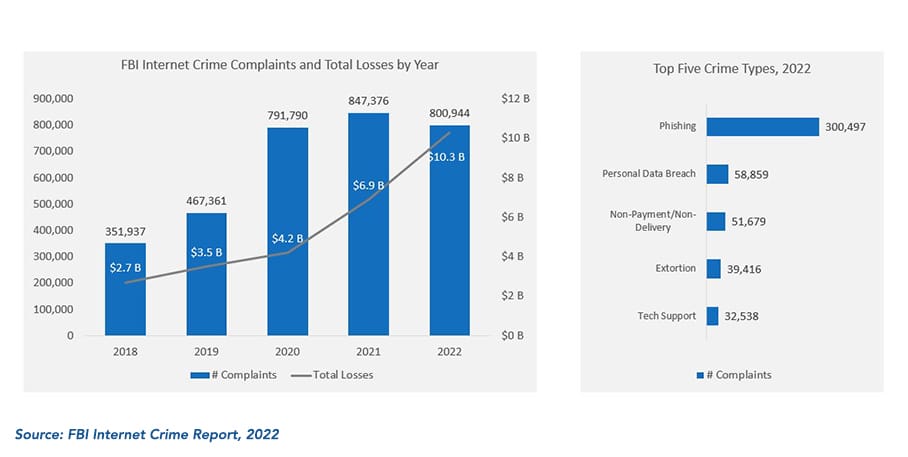

Phishing remains the most frequently reported type of attack, as indicated by over 300,000 phishing complaints reported in 2022, according to the FBI Internet Crime Report published in March 2023.

Figure 2: Phishing continues to be the preferred mode of cyber attack

In 2022, total losses of over $10 billion were recorded due to internet crime. Though the number of complaints reported in 2022 declined by 5% year over year, total losses grew from $6.9 billion in 2021, implying that the average loss per complaint increased by roughly 50% from 2021 to 2022.
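
The implied growth in average loss per complaint can be checked with a short calculation. The sketch below uses only the figures quoted above and treats "over $10 billion" as exactly $10 billion, which is an assumption that makes the result a lower bound; the function name is hypothetical.

```python
def avg_loss_growth(loss_prev, loss_curr, complaint_change):
    """Relative change in average loss per complaint between two years.

    loss_prev, loss_curr: total losses in the earlier and later year (same unit).
    complaint_change: relative change in complaint count, e.g. -0.05 for a 5% decline.
    """
    loss_ratio = loss_curr / loss_prev
    complaint_ratio = 1 + complaint_change
    return loss_ratio / complaint_ratio - 1


# $6.9 billion in 2021, at least $10 billion in 2022 (assumed lower bound),
# and 5% fewer complaints year over year.
growth = avg_loss_growth(6.9, 10.0, -0.05)
print(f"{growth:.1%}")
```

Because losses rose by about 45% while complaints fell by 5%, the average loss per complaint grew by a little more than half, which is consistent with the approximate 50% figure.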

The Perpetual Cat-and-Mouse Contest

While it is always hard to pick a winner in the never-ending cat-and-mouse contest, generative AI raises the stakes higher than ever. It enables both sides to do more with fewer resources and less investment. For the enterprise, however, it is typically an addition to traditional security services until such technologies reach a level of market maturity at which they can potentially replace, not just augment, existing security practices.

LLM-based systems are definitely not a silver bullet; as with any technology, there are trade-offs to consider, and constantly evolving regulations add to the challenge. Even so, it remains crucial to keep pace with the technology and embrace it by investing in advanced security tools and platforms designed to secure AI.

By Gaurav Dewan, Research Director, and Mark Gaffney, Senior Director, Avasant