Generative AI has seized the imagination of users across industries. What sets it apart is its capacity to democratize AI, making its applications understandable to technical and non-technical users alike. Its use is poised for rapid growth as more use cases are explored.

However, like any digital technology, generative AI adoption is a double-edged sword, offering great potential for innovation and creativity while raising significant concerns regarding security, trust, and governance. The expanding risk landscape becomes apparent as enterprises increasingly experiment with generative AI solutions to optimize their operations.

The dilemma is that, as the threat landscape keeps evolving, CIOs and CISOs recognize that traditional security measures alone cannot keep pace with emerging risks. Consequently, there’s a growing realization that certain decision-making responsibilities must be entrusted to AI and generative AI models.

Yet, this transition has its complications. Generative AI inadvertently lowers the barrier to entry for cybercriminals. It opens avenues for developing sophisticated cyberattacks, including business email compromise phishing campaigns, exploitation of zero-day vulnerabilities, probing of critical infrastructure, and the proliferation of malware.

Interestingly, our recent paper, “Generative AI-Based Security Tools are, in Effect, Fighting Fire With Fire,” underscores the need for a nuanced approach in deploying generative AI for cybersecurity purposes. While generative AI holds immense promise in bolstering cybersecurity defense, its deployment requires careful consideration of the associated risks and proactive mitigation strategies.

Supercharging Security with Generative AI

At the Microsoft AI Tour Mumbai event on January 31, 2024, Vasu Jakkal, corporate vice president of Microsoft Security, Compliance, Identity & Privacy, took the stage following Microsoft President India and South Asia Puneet Chandok’s keynote address, emphasizing Microsoft’s commitment to addressing critical concerns surrounding security, trust, and governance in generative AI.

Over the past year, both leading cloud service providers and managed security service providers have invested significantly in large language models (LLMs) for security applications. While players like Google and Microsoft have launched new generative AI security products, IBM, CrowdStrike, and Tenable have integrated generative AI features into their existing products. Though each product has its own capabilities, the common focus is on automating threat hunting and prioritizing breach alerts.

At the event, Vasu highlighted how Microsoft Security Copilot, an AI assistant-driven security platform powered by GPT-4, draws on Microsoft’s extensive library of security solutions, such as Microsoft Defender and Sentinel, along with proprietary data, to speed up threat detection and identify vulnerabilities, addressing process gaps overlooked by other methods. Additionally, it summarizes security breach details based on prompts.

Scott Woodgate, senior director of Microsoft Security, built on this and highlighted the following key aspects of addressing AI-specific risks and ensuring the secure development of AI applications:

- Understand the use of AI: Microsoft Defender offers users visibility into the usage of generative AI apps and the associated risks, enabling better management of assets.

- Insights about the data: To safeguard data, Microsoft Purview’s solutions provide insights into what sensitive data flows into the AI engine.

- Governance: Microsoft’s comprehensive security portfolio empowers organizations to govern AI effectively through tools like Microsoft Copilot, Microsoft Purview, and third-party generative AI solutions. These solutions include compliance controls within Copilot, streamlining adherence to business and regulatory requirements. For instance, with Purview’s natively integrated capabilities, the platform can detect policy violations within Copilot prompts or responses, reinforcing compliance standards.

Clearly, governance stands as the cornerstone of these efforts, ensuring that AI applications are developed and deployed in a secure and compliant manner.
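To make the idea of detecting policy violations in prompts or responses concrete, here is a minimal sketch in Python. It is not Microsoft Purview's API or detection logic; the rule names, regular expressions, and the `scan_text` and `redact` helpers are hypothetical and chosen only for illustration. Production classifiers are far more sophisticated than simple pattern matching.

```python
import re
from dataclasses import dataclass

# Hypothetical policy rules: a label mapped to a regular expression.
# Illustrative only; these are not Microsoft Purview classifiers.
POLICY_PATTERNS = {
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


@dataclass
class Violation:
    label: str    # which policy rule matched
    excerpt: str  # the matched text


def scan_text(text: str) -> list[Violation]:
    """Return every policy match found in a prompt or a response."""
    violations = []
    for label, pattern in POLICY_PATTERNS.items():
        for match in pattern.finditer(text):
            violations.append(Violation(label, match.group(0)))
    return violations


def redact(text: str) -> str:
    """Replace each match with a placeholder before text reaches the model."""
    for label, pattern in POLICY_PATTERNS.items():
        text = pattern.sub(f"[{label.upper()} REDACTED]", text)
    return text


if __name__ == "__main__":
    prompt = "Email alice@example.com the card 4111 1111 1111 1111 today"
    for violation in scan_text(prompt):
        print(violation.label, "->", violation.excerpt)
    print(redact(prompt))
```

The same check can run on both sides of the model: once on the prompt before it is sent and once on the response before it is shown, which mirrors the prompt-and-response coverage described above.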

Trust Nobody to Protect Everybody

The strategy outlined by Vasu and Scott for generative AI-based security solutions aligns with the prevailing industry trend of adopting security solutions built on Zero Trust principles. These principles are foundational to IT infrastructure modernization: they lead enterprises to treat all traffic as a potential threat and to enforce the principle of least privilege (PoLP).

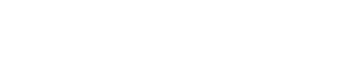

In our Avasant Cybersecurity Services 2023 RadarView, published in July 2023, we highlighted three key considerations for enterprise customers adopting a Zero Trust strategy:

- Value realization: Enterprises seek to integrate security tools and platforms comprehensively to address attack vectors in a converged fashion rather than in isolation.

- Asset discovery: Asset discovery is considered critical to advancing an enterprise's Zero Trust journey. Visibility into assets, such as applications, data, and enterprise systems, together with their business criticality, helps enterprises focus their security policies where they matter most.

- Visibility of identities: Identity protection and application of least privilege policies, context-based continuous verification, and just-in-time access are critical to the maturity of the Zero Trust framework.

Figure 1: Percentage of enterprises prioritizing the key Zero Trust considerations

The figure above illustrates the percentage of enterprises prioritizing these three considerations, emphasizing the importance of taking a holistic view of the Zero Trust framework across various attack vectors: users, devices, data, network, infrastructure, and applications.
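The identity-related considerations above (least privilege, context-based continuous verification, and just-in-time access) can be sketched as a single access decision. This is a simplified illustration under stated assumptions, not a reference implementation of any vendor's Zero Trust product: the `Grant` and `RequestContext` types, the `MAX_RISK` threshold, and the 30-minute default lifetime are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

MAX_RISK = 0.5  # assumed threshold; a real deployment would tune this


@dataclass(frozen=True)
class Grant:
    """A just-in-time grant: one identity, one resource, one action, one expiry."""
    identity: str
    resource: str
    action: str
    expires_at: datetime


@dataclass(frozen=True)
class RequestContext:
    """Signals evaluated on every request (hypothetical set)."""
    device_compliant: bool
    mfa_verified: bool
    risk_score: float  # 0.0 (low) to 1.0 (high), an assumed scale


def issue_grant(identity: str, resource: str, action: str,
                now: datetime, ttl_minutes: int = 30) -> Grant:
    """Just-in-time access: the grant expires on its own after ttl_minutes."""
    return Grant(identity, resource, action, now + timedelta(minutes=ttl_minutes))


def is_allowed(grants: list[Grant], identity: str, resource: str, action: str,
               ctx: RequestContext, now: datetime) -> bool:
    # Continuous verification: context is re-checked on every request,
    # regardless of what was allowed earlier in the session.
    if not (ctx.device_compliant and ctx.mfa_verified):
        return False
    if ctx.risk_score > MAX_RISK:
        return False
    # Least privilege: deny by default; allow only an exact, unexpired grant.
    return any(
        g.identity == identity
        and g.resource == resource
        and g.action == action
        and now < g.expires_at
        for g in grants
    )


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    grant = issue_grant("analyst", "siem-logs", "read", now)
    healthy = RequestContext(device_compliant=True, mfa_verified=True, risk_score=0.1)
    print(is_allowed([grant], "analyst", "siem-logs", "read", healthy, now))
    print(is_allowed([grant], "analyst", "siem-logs", "write", healthy, now))
```

The design choice worth noting is that nothing is trusted by default: a request succeeds only when the context checks pass and a narrowly scoped, still-valid grant exists.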

While generative AI offers promising capabilities, it should supplement existing security measures rather than replace them, at least until generative AI security tools mature sufficiently in the market.

As enterprises scout for generative AI security solutions, it’s important to recognize that LLM-based systems are not a panacea. Like any technology, they come with their own set of trade-offs. With regulatory landscapes constantly evolving, staying abreast of technological advancements is more critical than ever. Despite the risks, organizations must embrace these advancements and invest in advanced security solutions tailored to safeguard AI systems.

By Gaurav Dewan, Research Director, Avasant