Generative AI is one of the most hyped technologies in the current AI landscape, and rightly so. It brings tremendous efficiency gains and data monetization opportunities to the table. Although the large language model (LLM), the foundation of generative AI, has been around since the inception of transformer models in 2017, its adoption has skyrocketed with user-friendly and intuitive no-code platforms such as ChatGPT. But with great power comes great responsibility. The rapid advancement of generative AI has heightened societal risks and dilemmas, including racial or social biases, misinformation, ethical and transparency concerns, and data privacy threats.

The threat is real!

Figure 1: The most prominent risks and challenges enterprises face with generative AI.

-

- Data privacy: Generative AI models, trained on vast public datasets, may contain sensitive information, potentially leading to tracking, targeted advertising, or impersonation of individuals. In June 2023, OpenAI admitted to a leak of 100,000 ChatGPT account credentials on the dark web. Similarly, in April 2023, Samsung’s employees accidentally leaked confidential company information to ChatGPT.

-

- Ethical bias: Generative AI models can exhibit biases from their training data. For instance, in June 2023, Bloomberg revealed gender and racial bias in the Stable Diffusion Generative AI model, which generated predominantly male and lighter-skinned images for certain professional keywords.

-

- Hallucinations and inaccurate responses: Generative AI models can produce responses unrelated to any real data or training patterns. Such responses are known as hallucinations. For instance, in June 2023, legal sanctions of USD 5,000 were imposed on a US lawyer for providing fabricated case briefs created by ChatGPT. Similarly, a radio jockey sued OpenAI for defamation because of false information generated by ChatGPT about him.

-

- Intellectual property (IP) misappropriation: Generative AI’s use of copyrighted text, images, media content, and computer code has raised legal issues. For example, in January 2023, Getty Images sued Stability AI for illegally using 12 million images to train its image-generation system. Similarly, in August 2023, many newsrooms such as CNN, The New York Times, and Reuters inserted code into their websites restricting OpenAI’s web crawler, GPTBot, from scanning their platforms for content

-

- Environmental impact: The infrastructure required to train LLMs, the foundation of generative AI, results in a high carbon footprint, water consumption, and greenhouse emissions. For instance, according to Stanford’s AI Index report 2023, training the 175 billion parameter model BLOOM consumes enough energy to power the average American home for 41 years.

-

- Cyberattacks: The misuse of generative AI for malicious activities, such as creating computer viruses or exploiting security vulnerabilities, is a growing concern. For instance, in July 2023, hackers used the WormGPT generative AI tool to launch phishing attacks.

Ethics, fairness, and explainability are long-standing issues in AI, typically managed through a responsible AI framework. Although C-level discussions frequently address responsible AI, its implementation has been slow due to the perceived lack of immediate financial impact. It is often seen as a cost rather than an investment. However, with the advent of generative AI, ignoring AI ethics is no longer an option. Responsible AI, addressing language toxicity, output bias, and other such issues, will be central to all generative AI discussions.

The new risks posed by generative AI around data protection, copyright, and cybersecurity are critical and require immediate attention. Data privacy and security have emerged as the most significant barriers for enterprises adopting generative AI and moving projects from the experimentation stage to production. The biggest challenge enterprises face is the risk of uploading data, such as the source code or even sales figures, to a chatbot that might learn from such proprietary information and retain or share it outside the company or with employees lacking proper authorization. This is particularly acute in heavily regulated industries like healthcare and banking, where data protection is paramount and reputations are at stake. As a result, many enterprises are proactively incorporating security guardrails to prevent data leakage, toxic or harmful content, code security vulnerabilities, and IP infringement risks when using generative AI platforms. This has led to the emergence of new features in security solutions, such as the following:

-

- Tracing data from its origin to classify and protect it accurately wherever it goes.

- Understanding the context of a prompt to apply policy-related controls to conversations with an LLM in real time.

- Measuring a generative AI model’s performance against guidelines from policymakers, such as the National Institute of Standards and Technology, to assess its security, privacy, and IP handling practices.

Concerning the high environmental impact of LLMs, various techniques are being evaluated to develop more economical and faster LLM models while reducing their environmental footprint. For instance, LiGO (linear growth operator) is an innovative technique developed by MIT researchers to minimize the environmental impact of training LLMs by cutting the computational cost by half. It works by initializing the weights of a larger model using those of smaller pretrained ones, facilitating efficient neural network scaling. This not only maintains the performance benefits of larger models but also requires substantially reduced computational cost and training time compared to training a large model from scratch.

Establishing guardrails will be crucial for mitigating the risks of generative AI

The path ahead for generative AI is anything but certain. The pivotal question is whether the journey of generative AI will parallel that of technologies like self-driving cars, which, despite groundbreaking advancements and heavy investments, face a myriad of technical, regulatory, and ethical challenges to real-world deployment. Some of the efforts described below reflect a growing awareness and proactive approach towards managing the risks associated with generative AI across tech giants, enterprises, and governments.

Tech giants

-

- In July 2023, seven technology firms—Google, Meta, Amazon, Anthropic, Microsoft, OpenAI, and Inflection AI—agreed to enable voluntary standards for safety, security, and trust to manage the risks associated with generative AI.

- In July 2023, Plurilock launched an AI-enabled cloud access security broker solution to prevent the transfer of sensitive data to generative AI systems.

- In June 2023, Meta initiated a Community Forum on generative AI to gather feedback on desired AI principles.

- In May 2023, OpenAI announced a USD 1 million fund for ideas and frameworks addressing generative AI governance, bias, and trust issues.

- In April 2023, Nvidia introduced the NeMo Guardrails to develop safe and trustworthy LLM conversational systems.

Enterprises

-

- In August 2023, TikTok implemented new rules requiring users to tag AI-generated content or risk its removal by moderators.

- After a data leak due to ChatGPT, Samsung developed guardrails and policies for generative AI system usage.

- In April 2023, Snapchat introduced guardrails, including an age filter and interaction insights for parents, to monitor generative AI chatbot engagements with teenagers and kids.

Governments

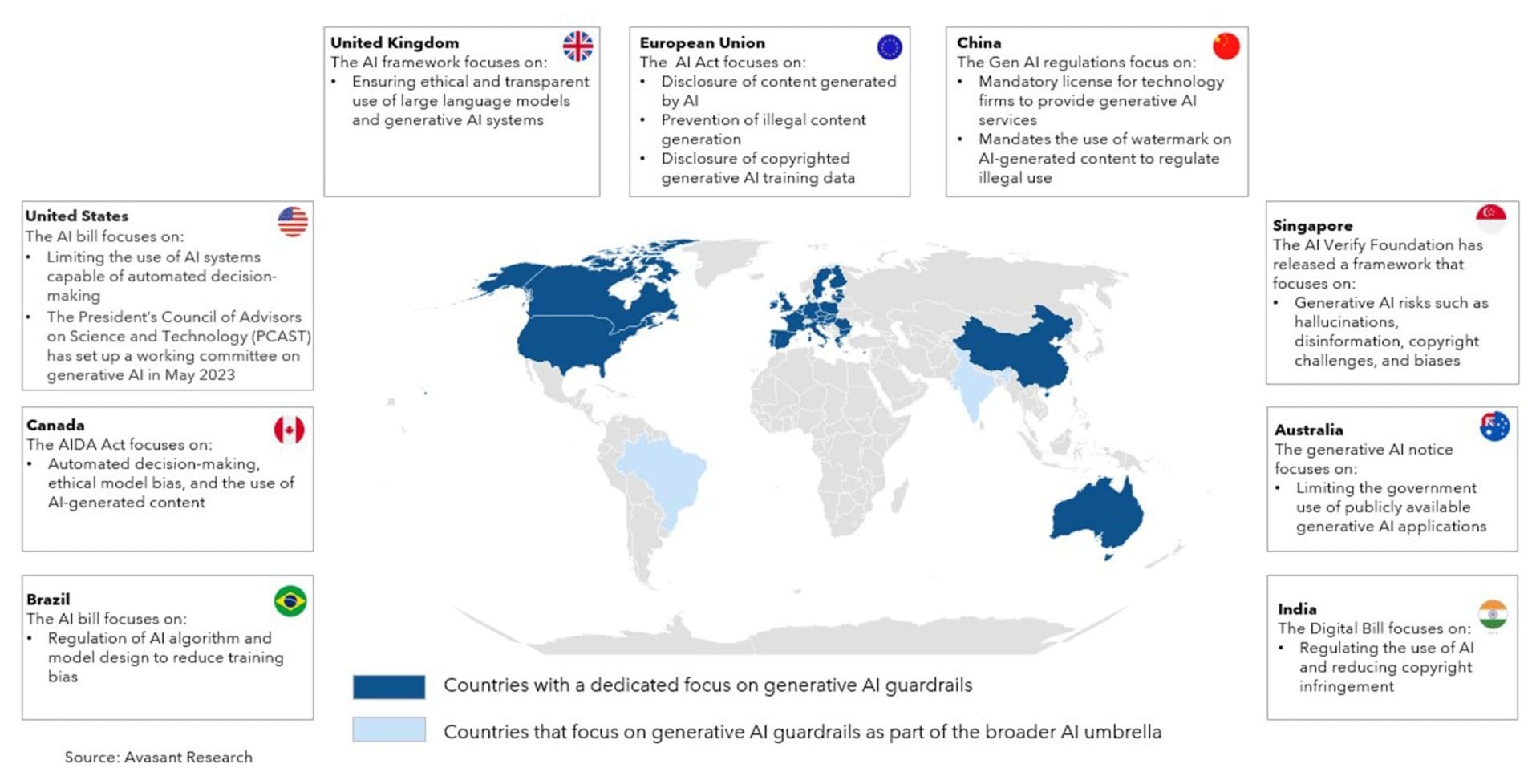

Many countries, including the United States and China, and the countries under the European Union, have introduced bills and regulations monitoring generative AI usage. These address copyrighted content generation and transfer, AI content watermarking, and automated decision-making backed by ethical AI.

Figure 2: Most countries are developing guardrails to minimize risks associated with generative AI.

The EU AI Act is currently in its final stages of deliberation. If enacted in its current form, this act will lead to strict conformity standards that all parties—providers, deployers, and users—must adhere to, as there will be no risk transfer to anyone. Meanwhile, in the US, regulations vary by state, with California focusing on online transparency to prevent the use of hidden bots in sales and elections and Texas implementing a data protection and privacy act. As these regulations become more stringent, companies will be forced to take responsibility across all facets of AI, not just generative AI. This highlights a global trend towards increased accountability and regulation in the AI sector.

Furthermore, enterprises should remain flexible regarding the use of hyperscalers and on-premises solutions. The myriad use cases for generative AI, combined with the evolving landscape of data protection, processing, and storage laws, may restrict the use of certain hyperscalers and necessitate on-premises solutions. This will likely result in a hybrid generative AI strategy, combining both on-premises and cloud-based solutions. To prepare for this eventuality, enterprises should design adaptable architectures capable of switching between different compute types, whether using a hyperscaler, a SaaS provider, or an on-premises solution.

What must enterprises do?

Generative AI is quickly becoming a competitive necessity for enterprises. Those who delay its adoption risk falling behind or going out of business. As regulations and reforms are still underway, these proactive steps will help enterprises leverage the benefits of generative AI while minimizing its associated risks.

Seek data protection assurances: Initially, companies hesitated to adopt cloud services until AWS received CIA approval in 2013, significantly boosting trust and adoption. Similarly, Azure OpenAI recently received US government approval and announced new guidelines guaranteeing data security during transit and storage, confirming that prompts and company data are not used for training. This endorsement will likely reduce resistance to adopting Azure OpenAI and, over time, solutions by other LLM providers. As the landscape evolves, using generative AI products will pose data security risks similar to using SaaS products. Adopting generative AI should be relatively easy for companies already comfortable with SaaS platforms. Alternatively, companies can opt for on-premises, open-source models, which may be more costly but preferred by sectors like finance and insurance that are traditionally reluctant to send data outside their network.

Implement content moderation and security checks: Enterprises must ensure that any LLM model they use, whether closed, open, or a narrow transformer, undergoes comprehensive security checks. These include protection against prompt injection, jailbreak, semantic, and HopSkipJump attacks and checks for personally identifiable information, IP violations, and internal policies. These checks should be mandated from when a user inputs a prompt until the foundation model generates a response. Similarly, the output should undergo the same checks before being returned to the user. Technical components like scanners, moderators, and filters will further help secure and validate the information. Context-specific blocking, data hashing before input, and building exclusion lists like customer logos, personally identifiable information, and so on will also be essential for data preparation.

In the realm of generative AI, it is imperative that companies collaborate with service providers possessing specialized expertise. Given the unique challenges posed by generative AI, from creating content compliant with regulations to ensuring the generated data respects privacy standards, partnering with knowledgeable providers is crucial. Such experts can navigate the intricacies of data privacy and content moderation specific to generative AI, ensuring regulatory compliance and ethical content generation.

By Chandrika Dutt, Research Leader, Avasant and Abhisekh Satapathy, Senior Analyst, Avasant