Introduction

Generative AI has ushered in a new era of content creation, enabling individuals to generate vast amounts of content quickly and easily. This has resulted in an explosion of content across digital platforms such as social media and other sites that accept user-submitted content. For instance, in 2022 monthly data traffic was around 15 GB per smartphone, 20 GB per mobile PC, and 11 GB per tablet, and these figures are projected to reach 46, 31, and 27 GB per month, respectively, by 2028.

However, the rapid growth of content also poses challenges for publishers in moderating user-submitted content. Traditional content moderation tools primarily rely on flagging keywords or tags that indicate potentially problematic content, such as hate speech or harassment. Today's generative AI systems, by contrast, produce content that fits the context of a conversation far better: it reads as much more human-like and can avoid the keywords that would otherwise flag it for moderation. Consequently, there is a pressing need for content moderation solutions that can effectively navigate the complexities of this evolving landscape.

The impact of generative AI on content moderation can be viewed from two angles: as a driver of the need for content moderation and as a tool to increase the productivity of content moderation. This report explores the trends, challenges, and potential solutions in moderating AI-generated content.

The Need for More Robust Content Moderation Services

The advent of generative AI has sparked remarkable growth in demand for content moderation services. The accessibility and simplicity of self-generated content have become fundamental drivers of the rise in the volume of content created. Several trends have emerged in this domain, each driving the need for more and better content moderation.

First, the volume of content created by individuals is skyrocketing: more than 63% of the world's population has access to the internet, around 60% of those users are on Facebook, and about 15% of the content they generate takes the form of video. This growth has also increased social media usage, driven by factors such as the rise in video generation, evolving consumption patterns, and the emergence of the metaverse.

Second, AI-generated content is improving and becoming more difficult to distinguish from human-generated content. It can span multiple languages, create plausible variations of human speech, and more easily evade basic keyword- or tag-based moderation tools.

Existing keyword-based moderation tools fail to flag content that is problematic in its overall message but contains no offensive keywords. This highlights the critical need for content moderation services that can match the sophistication of AI-generated content.
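To make this limitation concrete, here is a minimal Python sketch of a keyword filter. The blocklist and sample sentences are hypothetical, chosen only for illustration; production filters use far larger curated lists, but they share the same blind spot: a message can be hostile without containing any listed word.

```python
import re

# Hypothetical blocklist for illustration; real deployments maintain much
# larger, curated lists per language and per policy area.
BLOCKLIST = {"idiot", "stupid", "scam"}


def keyword_flag(text: str) -> bool:
    """Flag text if any blocklisted keyword appears as a whole word."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)


# Contains a blocklisted keyword, so it is caught.
print(keyword_flag("This offer is a scam."))  # → True
# Hostile in its overall message, but uses no blocklisted keyword.
print(keyword_flag("Everyone knows people like you don't belong in this community."))  # → False
```

Catching the second message requires a model that interprets meaning rather than matching strings, which is the gap described above.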

Another problem is that on some platforms, such as a healthcare community website, factual accuracy is critical. In such cases, moderation must be able to flag content that may need additional fact-checking. As generative AI systems flood such sites with content, and given their tendency to sometimes “hallucinate” (make up facts), the job of moderation becomes much more difficult.

Current Content Moderation Models

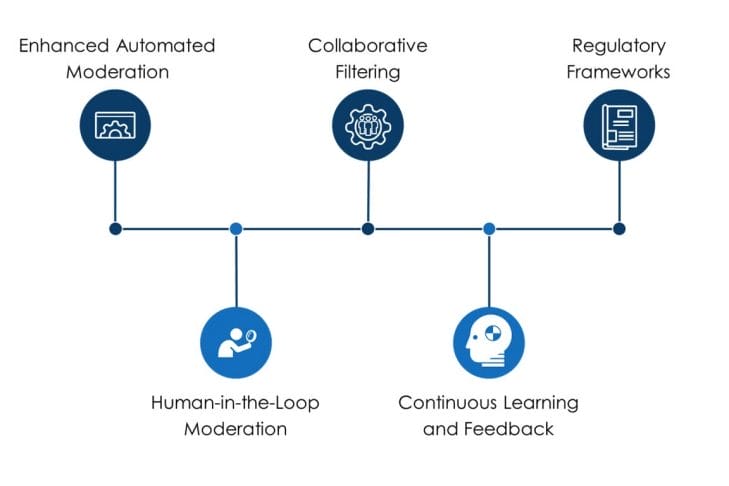

Currently, content moderation companies typically tackle generative AI content with a three-step model whose final step is a feedback loop. The process involves:

- Step 1: Automated Moderation – Content undergoes automated moderation using keyword-based and basic image recognition AI systems to flag potential issues based on predefined rules and patterns.

- Step 2: Human Moderation – Human moderators review and moderate the output of the automated moderation system, drawing on their understanding of the context of the conversation.

- Step 3: Feedback Loop – Human moderators provide feedback and insights based on their moderation decisions to improve the performance of the AI-powered system utilized in Step 1 over time.
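The three steps can be sketched in Python as a small pipeline. This is a minimal illustration under stated assumptions, not any provider's actual system: the keyword blocklist, the `human_review` callable standing in for a human moderator, and the feedback rule (retire a keyword once humans overturn most of its flags, governed by the illustrative `min_reviews` and `min_precision` thresholds) are all hypothetical. A production feedback loop would more likely retrain a machine learning model on moderator decisions than edit a word list.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModerationPipeline:
    # Step 1 rules: a hypothetical keyword blocklist.
    blocklist: set[str]
    # Step 2 stand-in: plays the human moderator, returning True when the
    # content truly violates policy.
    human_review: Callable[[str], bool]
    # Step 3 settings (illustrative values, not taken from any real system).
    min_reviews: int = 3
    min_precision: float = 0.5
    reviewed: defaultdict = field(default_factory=lambda: defaultdict(int))
    confirmed: defaultdict = field(default_factory=lambda: defaultdict(int))

    def automated_step(self, text: str) -> set[str]:
        """Step 1: return the blocklisted keywords found in the text."""
        return {w for w in text.lower().split() if w in self.blocklist}

    def moderate(self, text: str) -> bool:
        """Run one item through the pipeline; True means it is removed."""
        hits = self.automated_step(text)
        if not hits:
            return False  # in this sketch, unflagged content skips review
        violates = self.human_review(text)  # Step 2: human decision
        for word in hits:  # Step 3: log the outcome against each keyword
            self.reviewed[word] += 1
            self.confirmed[word] += int(violates)
        self._update_rules()
        return violates

    def _update_rules(self) -> None:
        """Step 3: retire keywords whose flags humans mostly overturn."""
        for word in list(self.blocklist):
            n = self.reviewed[word]
            if n >= self.min_reviews and self.confirmed[word] / n < self.min_precision:
                self.blocklist.discard(word)


# Hypothetical reviewer: only messages containing "threat" truly violate policy.
pipeline = ModerationPipeline(
    blocklist={"kill", "threat"},
    human_review=lambda text: "threat" in text.lower(),
)
for msg in [
    "kill the boss to finish the level",
    "how do i kill a stuck process",
    "kill streak of ten in one match",
    "this is a threat to you",
]:
    pipeline.moderate(msg)

print(sorted(pipeline.blocklist))  # → ['threat']
```

In this run, moderators reject three flags triggered by "kill" in gaming and technical contexts, so the feedback step stops Step 1 from flagging that word, while "threat", whose flag was upheld, stays on the list.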

Although future systems may incorporate more advanced contextual AI in the first step, we expect human moderation to remain pivotal due to the complexity and contextual nuances of AI-generated content.

Differences Between Moderating Generative AI Content and Traditional Content

Moderation for generative AI content differs from traditional content moderation in several key aspects:

- Contextual understanding: Generative AI models are getting better and better at incorporating context-specific information, cultural references, and subtle nuances, which makes problematic content harder for automated systems to spot. Human moderators are critical in deciphering the context and making informed moderation decisions.

- Adaptability: Generative AI models continually evolve and improve over time, necessitating flexible and adaptable content moderation systems. Regular updates and retraining of moderation models are imperative to ensure effectiveness.

- Ethical considerations: Generative AI content raises ethical concerns regarding privacy, consent, and plagiarism. Content moderation processes for generative AI must incorporate considerations for these ethical dimensions and adhere to relevant regulations and policies.

Potential Solutions and Innovations in Content Moderation

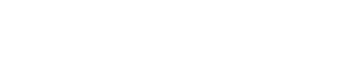

Several potential solutions and innovations are emerging to address the challenges associated with content moderation for AI-generated content:

- Enhanced Automated Moderation: Advancements in AI technologies, such as natural language processing and computer vision, can improve the ability of automated moderation systems to understand context and intent. This includes developing models specifically trained on generative AI content to more accurately identify potential issues.

- Human-in-the-Loop Moderation: Combining human judgment with automated systems can enhance the accuracy and efficiency of content moderation. Human moderators can provide guidance and context-specific knowledge to AI systems, refining the automated moderation process.

- Collaborative Filtering: Building collaborative filtering mechanisms that leverage community feedback and user reporting can assist in identifying problematic or harmful generative AI content. Twitter’s Community Notes feature is one example. This approach allows users to participate actively in moderation, creating a safer and more inclusive environment.

- Continuous Learning and Feedback: Establishing a feedback loop between human moderators and automated systems is crucial for ongoing learning and improvement. Human moderation decisions can be used to train and refine automated moderation models, making them more effective over time.

- Regulatory Frameworks: Developing comprehensive regulatory frameworks that address the unique challenges posed by generative AI content can provide guidance and accountability for content moderation practices. These frameworks should consider factors such as privacy, consent, ownership, and responsible use of AI.
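The community-reporting approach can be illustrated with a minimal Python sketch: it sums user reports per item, weights each report by the reporter's track record, and escalates the item to human moderators once the weighted score crosses a threshold. This is a generic illustration, not the algorithm behind Twitter's Community Notes, which works by having contributors write and rate notes; the `ReportQueue` class, the default weights, the threshold, and the update rate are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ReportQueue:
    """Aggregates user reports per item, weighted by reporter reliability.

    All weights, thresholds, and rates here are illustrative assumptions.
    """
    escalation_threshold: float = 2.0
    default_reliability: float = 0.5
    # Per-reporter weights, updated from moderator outcomes by record_outcome.
    reliability: dict[str, float] = field(default_factory=dict)
    scores: dict[str, float] = field(default_factory=dict)
    reporters: dict[str, set[str]] = field(default_factory=dict)
    escalated: list[str] = field(default_factory=list)

    def report(self, item_id: str, reporter_id: str) -> None:
        """Record one user report and escalate the item if warranted."""
        seen = self.reporters.setdefault(item_id, set())
        if reporter_id in seen:
            return  # count each user at most once per item
        seen.add(reporter_id)
        weight = self.reliability.get(reporter_id, self.default_reliability)
        self.scores[item_id] = self.scores.get(item_id, 0.0) + weight
        if (
            item_id not in self.escalated
            and self.scores[item_id] >= self.escalation_threshold
        ):
            self.escalated.append(item_id)  # hand off to human moderators

    def record_outcome(
        self, reporter_ids: list[str], upheld: bool, rate: float = 0.2
    ) -> None:
        """Move each reporter's weight toward 1 if moderators upheld the
        report, or toward 0 if they rejected it."""
        target = 1.0 if upheld else 0.0
        for r in reporter_ids:
            current = self.reliability.get(r, self.default_reliability)
            self.reliability[r] = current + rate * (target - current)


queue = ReportQueue(reliability={"trusted_user": 1.0})
queue.report("post-42", "trusted_user")  # score 1.0
queue.report("post-42", "new_user_a")    # score 1.5
queue.report("post-42", "new_user_a")    # duplicate, ignored
queue.report("post-42", "new_user_b")    # score 2.0, reaches the threshold
print(queue.escalated)  # → ['post-42']
```

Weighting reports by reliability, which `record_outcome` adjusts from moderators' decisions, makes it harder for a few bad-faith users to force an escalation and ties community reporting back into the human feedback loop.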

The Role of Service Providers in Generative AI Content Moderation

Outsourcing providers possess several competitive advantages in generative AI content moderation services:

- Scalability: Their ability to operate at scale allows them to efficiently handle large volumes of work across various industries. The scalability of outsourcing companies positions them favorably to tackle the increased demand for content moderation services resulting from the proliferation of AI-generated content.

- Training and reskilling: Outsourcing companies have extensive experience in conducting training and reskilling programs. This enables them to rapidly train and upskill human moderators to effectively handle the unique challenges associated with moderating generative AI content.

- Software development and management: Outsourcing companies also possess strong software development capabilities, allowing them to develop and maintain in-house and partner software tools that bridge the gap between keyword-based moderation and generative AI-based moderation.

Service providers' delivery expertise, cost focus, and talent-sourcing capabilities further strengthen their competitive advantages in generative AI content moderation services.

Using AI to Moderate Generative AI Content

In summary, moderating AI-generated content presents new and complex challenges that traditional keyword-based moderation tools struggle to address. Outsourcing companies, with their scalability, training and reskilling programs, and software development expertise, are well positioned to provide moderation services for generative AI content. However, effectively moderating generative AI content requires a combination of automated moderation, human judgment, continuous learning, and new moderation tools that themselves use generative AI. By embracing innovative solutions, considering ethical dimensions, and leveraging the strengths of outsourcing companies, generative AI content moderation can pave the way for safer and more inclusive digital environments.

Aditya Jain, Research Leader